02 Jan

Data processing is the collection of raw data and its conversion into usable information. It is typically performed by a data scientist or a team of data scientists, and it must be done accurately so that it does not adversely affect the end product, the data output.

Six Data Processing Steps:

As we know, approximately 80% of real-world data is unstructured or unorganized. Such data is mostly inconsistent, lacks a uniform behaviour or pattern, is incomplete, and contains many errors.

Preprocessing of data is a well-known data mining technique that converts raw or unstructured data into a meaningful or understandable format. Data preprocessing is a basic unit of meaningful data analysis. It is one of the most important stages of machine learning projects. Data preprocessing is mostly used in database-driven applications.

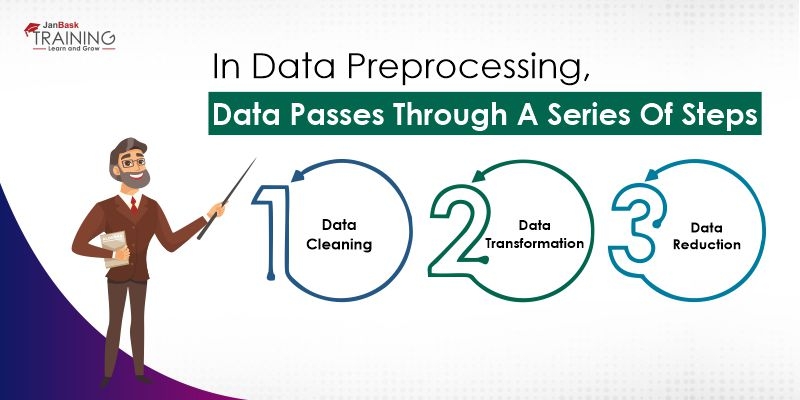

In data preprocessing, data passes through a series of steps:

Data standardization is the data-processing workflow that converts the structure of disparate datasets into a common data format. As part of the data preparation field, data standardization handles the transformation of datasets after the data is pulled from source systems and before it is loaded into target systems. Hence, data standardization can also be thought of as the transformation rules engine in data exchange operations.

Data standardization enables the data consumer to analyze and use data in a consistent way. Typically, when data is created and stored in the source system, it is structured in a particular way that is often unknown to the data consumer.
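As a rough illustration of converting disparate datasets into a common data format, the sketch below maps rows from two source systems onto one shared schema. The source names (`crm_rows`, `billing_rows`), the field names, and the date formats are hypothetical and chosen only for this example.

```python
from datetime import datetime

# Hypothetical rows from two source systems. Field names and date
# formats are assumptions made for illustration only.
crm_rows = [{"CustomerName": " Alice Smith ", "SignupDate": "01/02/2024"}]
billing_rows = [{"name": "BOB JONES", "signup": "2024-02-15"}]


def standardize(row, name_key, date_key, date_format):
    """Map one source row onto the common format {'name', 'signup_date'}."""
    parsed = datetime.strptime(row[date_key], date_format)
    return {
        # Collapse stray whitespace and use one capitalization style.
        "name": " ".join(row[name_key].split()).title(),
        # Store every date as an ISO 8601 string (YYYY-MM-DD).
        "signup_date": parsed.date().isoformat(),
    }


# Each source gets its own transformation rule, applied after extraction
# and before loading into the target system.
common = (
    [standardize(r, "CustomerName", "SignupDate", "%d/%m/%Y") for r in crm_rows]
    + [standardize(r, "name", "signup", "%Y-%m-%d") for r in billing_rows]
)
print(common)
```

Once both sources share the same keys and value formats, the data consumer can work with `common` without knowing how each source system originally structured its records.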

Data normalization is needed when we are dealing with attributes on different scales.

Data normalization maps the values of data attributes so that they fall within a smaller, common range. When a dataset has several attributes whose values are on different scales, this may lead to poor data models when performing data mining tasks. So the attributes are normalized to bring them all onto the same scale.
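Two common ways to bring attributes onto the same scale are min-max normalization and z-score normalization. The sketch below shows both using only the Python standard library. The function names and the sample `ages` and `incomes` columns are illustrative assumptions.

```python
from statistics import mean, pstdev


def min_max_normalize(values, new_min=0.0, new_max=1.0):
    """Rescale values linearly so they fall within [new_min, new_max]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # A constant column carries no scale information.
        return [new_min for _ in values]
    return [(v - lo) / (hi - lo) * (new_max - new_min) + new_min for v in values]


def z_score_normalize(values):
    """Rescale values to zero mean and unit (population) standard deviation."""
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]


# Two attributes on very different scales (made-up sample data).
ages = [20, 30, 40, 60]
incomes = [20000, 50000, 80000, 120000]

print(min_max_normalize(ages))
print(min_max_normalize(incomes))
print(z_score_normalize(ages))
```

After min-max normalization both columns lie in the range 0 to 1, so neither attribute dominates a distance-based method simply because its raw numbers are larger.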

Data cleaning is the process of ensuring that your data is correct, consistent and usable. Clean data often matters more than sophisticated algorithms, because even simple algorithms can produce good results on clean data.

It involves two steps:

The concept of missing values is important to understand in order to manage data effectively. If missing values are not handled properly by the researcher, he or she may end up drawing an inaccurate inference about the data. Because of improper handling, the result obtained by the researcher will differ from the one obtained when the missing values are present.
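One simple way to handle missing values is to fill them with a statistic computed from the observed values. This is a minimal sketch, assuming missing entries are represented as `None`. The `impute` helper and the sample column are hypothetical, and mean or median filling is only one of several possible strategies.

```python
from statistics import mean, median


def impute(values, strategy="mean"):
    """Replace None entries with the mean or median of the observed values."""
    if strategy not in ("mean", "median"):
        raise ValueError("strategy must be 'mean' or 'median'")
    observed = [v for v in values if v is not None]
    if not observed:
        raise ValueError("cannot impute a column with no observed values")
    fill = mean(observed) if strategy == "mean" else median(observed)
    return [fill if v is None else v for v in values]


# Made-up column with two missing entries.
scores = [10, None, 20, 60, None]

print(impute(scores, "mean"))
print(impute(scores, "median"))
```

The two strategies fill different values here because the observed data is skewed, which shows how the choice of handling method can change the conclusions drawn from the data.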

Randomly missing values are of two types:

Imputation:

In statistics, imputation plays a major role. Imputation is the replacement of missing data with substituted values. Missing data leads to three major problems in statistics:

Outliers in data mining.

An outlier is an object that deviates significantly from the rest of the objects. An outlier can be caused by a measurement or execution error, and the process of analyzing outlier data is referred to as outlier analysis or outlier mining.

What is the need for outlier analysis?

Many data mining methods do not focus on outliers, but in some applications, such as fraud detection, the outliers can be the most interesting part of the data.

Detecting outliers:

A threshold value must be initialized before outliers are detected. Any data point whose distance from its nearest cluster is greater than the threshold is identified as an outlier.
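The threshold rule can be sketched as follows, assuming the cluster centres have already been computed by some clustering step and that distance means Euclidean distance. The `find_outliers` helper, the centroids, the points and the threshold value are all illustrative assumptions.

```python
from math import dist  # Euclidean distance, available in Python 3.8+


def find_outliers(points, centroids, threshold):
    """Return points whose distance to the nearest centroid exceeds threshold."""
    outliers = []
    for p in points:
        nearest = min(dist(p, c) for c in centroids)
        if nearest > threshold:
            outliers.append(p)
    return outliers


# Hypothetical cluster centres, assumed to come from an earlier clustering run.
centroids = [(1.0, 1.0), (8.0, 8.0)]
points = [(1.2, 0.9), (0.8, 1.1), (8.1, 7.9), (7.7, 8.3), (4.5, 15.0)]

print(find_outliers(points, centroids, threshold=2.0))
```

The choice of threshold matters. A value that is too small flags ordinary points at the edge of a cluster, while a value that is too large lets genuine outliers pass.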

Steps:

In today's world of extensive economic exchange and rapid change in science and technology, companies keep changing the way they work to stay competitive. As a large amount of data is now available, we need well-equipped ways to handle it; data at this scale is known as 'Big Data'. Big Data needs proper processing so that it can be used for various business purposes. The data must be explored properly to understand its meaning and to identify the relationships within it, and data models must be used to explain its behaviour. We need the right processing method to analyze data for the best results.


The JanBask Training Team includes certified professionals and expert writers dedicated to helping learners navigate their career journeys in QA, Cybersecurity, Salesforce, and more. Each article is carefully researched and reviewed to ensure quality and relevance.
