

In this Docker tutorial, I will try to help you understand what Docker is and why to use it, what containers and Docker images are, and, at the end, what Docker Compose and Docker Swarm are.

So, let's begin: what is Docker?

Docker builds and runs applications in small, lightweight containers.

All the libraries the application uses are packaged into the container along with it, so the application no longer depends on what is installed on the host machine.

Docker containers are often considered an alternative to virtual machines (VMs), and they have several advantages over them. Let us look at those in this Docker tutorial.

Docker was released on March 20, 2013, and its popularity and usage have grown tremendously in a short time.

All the big cloud providers, such as AWS, Azure, and GCP, now support Docker on their platforms, and many customer-facing products that handle heavy traffic run in Docker containers on these clouds.

Docker Hub is the largest public source of Docker images, offering more than 1.5 million images for free.

Developers use containers to deploy their applications on machines running any Linux distribution, not only on the machine where the application code was developed.

Virtual machines use the resources of the host machine but appear as separate machines.

Every virtual machine runs its own OS.

Each virtual machine has its own OS and its own allocated memory, and it uses its resources in isolation, so several operating systems run in parallel on the same host.

Containers make more efficient use of the host's resources and keep the application's footprint small.

Each virtual machine runs a separate OS on top of the host OS, so virtual machines are very large; containers share the host OS kernel, so they are lightweight.

For example, three virtual machines mean three separate operating systems installed.

Docker containers provide weaker isolation, and therefore less security, than virtual machines, because all containers on a host share the same OS kernel, whereas each virtual machine has its own kernel.

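Docker containers are highly portable because they package the application with its dependencies and run on any host with a compatible kernel and container runtime. Virtual machines are less portable because each one carries a complete operating system, which makes VM images large and harder to move between environments.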


Monolithic architecture is a software development approach in which all the separate, interworking components are combined into one application and treated as a single unit.

For example, consider an e-commerce website made up of different modules: user interface, product inventory, user authorization, payment authorization, and delivery.

In a monolithic architecture, these modules are bundled together into a single application.


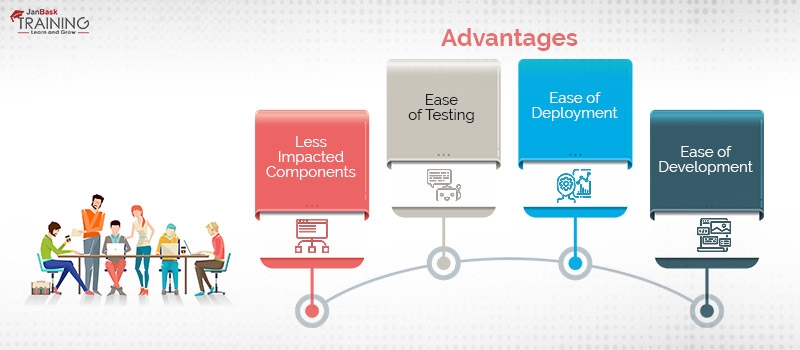

In this architecture, the application is treated as a single entity that combines all its components. It usually consists of a client, a server, and a database, so all requests are processed in one place. The drawback of this approach is the shared code base: changing one component means the whole application has to be rebuilt.


Because of this compact nature, concerns such as logging and monitoring are changed once for the whole application rather than separately for many distributed components, which makes such changes easy for developers to handle.

Because a monolithic application is a single entity, it is easier to test than a microservice application.

Deployment is also simple: every change produces a single file or directory for the application, and only that one artifact needs to be redeployed each time.

As all developers work on a common code base, it is easy to develop applications using a monolithic approach.

On the downside, as the code base grows, so does its complexity.

As changes and new features are introduced, the application becomes harder to manage, because every change affects the complete application, and development time increases.

It is also difficult to adopt a new technology for a single component, because the complete code base has to change.

In contrast to the monolithic approach, where the application is a single entity, a microservices architecture breaks the application down into multiple modules. Each module serves a separate purpose and has its own database.


Because every module in the application is an individual component, development is easier, and troubleshooting is simpler when a bug appears, since only the faulty module needs to be rechecked.

Because the code is broken down into small modules, new features can be introduced at regular intervals.

Under high traffic, or when better performance is needed, only the responsible component has to be scaled up or down; there is no need to scale every component unnecessarily, as in the monolithic approach.
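To make the scaling point concrete, here is a small back-of-the-envelope sketch. The module names come from the e-commerce example above, but the per-module memory figures are hypothetical, chosen only for illustration. It compares scaling the whole monolith three times with scaling only the payment module three times.

```python
# Hypothetical memory needed by one instance of each module, in MB.
# These numbers are made up purely for illustration.
MODULE_MEMORY_MB = {
    "user_interface": 300,
    "product_inventory": 400,
    "user_authorization": 150,
    "payment_authorization": 200,
    "delivery": 250,
}


def monolith_memory(copies: int) -> int:
    """A monolith can only be scaled by running whole copies of the application."""
    return copies * sum(MODULE_MEMORY_MB.values())


def microservices_memory(replicas: dict[str, int]) -> int:
    """Each microservice is scaled on its own; modules not listed keep 1 instance."""
    return sum(
        memory * replicas.get(name, 1) for name, memory in MODULE_MEMORY_MB.items()
    )


if __name__ == "__main__":
    # Suppose payment traffic triples, so payments need three instances.
    print("monolith:", monolith_memory(3), "MB")  # monolith: 3900 MB
    print("microservices:", microservices_memory({"payment_authorization": 3}), "MB")  # microservices: 1700 MB
```

With these assumed figures, the monolith needs three full copies (3 × 1300 MB), while the microservices setup adds only two extra payment instances (1300 + 2 × 200 MB).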

By now, this Docker tutorial should have given you a basic idea of the history before containerization and of how microservices have changed the way software is developed.

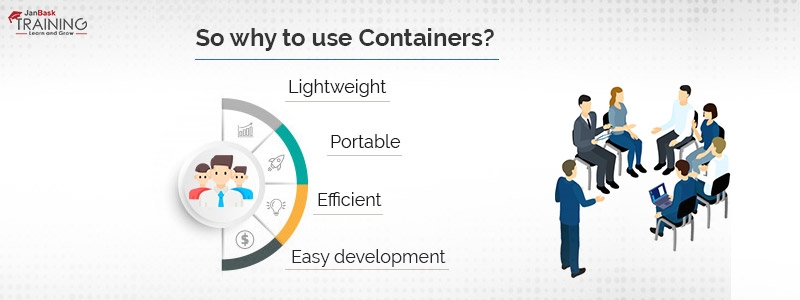

Let’s now understand in detail why we should use containers.

Containers are small units used to run applications. They do not include a full operating system with its own kernel; instead, they share the host OS kernel.

Because a container holds only the libraries and resources required to run the application, it is lightweight.

Containers, and the applications inside them, can be deployed on any host that runs a container runtime with a compatible kernel and CPU architecture.

Applications are easy to deploy and upgrade, which reduces time to market.

Because the application is broken down into modules, each developer needs to focus only on their own component, and all such components can run together in a container cluster to work as one complete application.


Next in this Docker tutorial, let’s look at some more Docker components:

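A Dockerfile is a text file that contains a set of instructions for Docker.

Docker uses these instructions to build an image: the `docker build` command processes them in order and creates an image from the Dockerfile. Most instructions, such as `FROM`, `WORKDIR`, `COPY`, and `RUN`, take effect at build time; only the command set with `CMD` (or `ENTRYPOINT`) runs when a container is started from the image. Each instruction is a separate build step, and instructions that change the filesystem, such as `RUN`, `COPY`, and `ADD`, each add a new layer to the image. Here is a simple example: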

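```dockerfile
# Alpine Linux base image; Alpine's package manager is apk, not apt-get
FROM alpine
# Working directory (created if missing); must match the COPY target below
WORKDIR /mywork
# Copy the build context into the working directory
COPY . /mywork
# Install wget; --no-cache avoids keeping the package index in the layer
RUN apk add --no-cache wget
# Default command run when a container starts from this image
CMD ["echo", "hello"]
```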
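Because each build step depends on the ones before it, Docker can reuse a cached step only if that step and every step before it are unchanged (for `COPY` and `ADD`, Docker also compares the contents of the copied files). The toy model below illustrates that rule by chaining hashes. It is a simplified sketch under that assumption, not how Docker actually computes layer or cache IDs, and it only looks at instruction text.

```python
import hashlib


def step_ids(instructions: list[str]) -> list[str]:
    """Give each build step an ID derived from its parent's ID and its own text.

    Toy model only: since the parent's ID is hashed in, changing one
    instruction changes the ID of that step and of every step after it.
    """
    ids = []
    parent = ""
    for instruction in instructions:
        digest = hashlib.sha256(f"{parent}\n{instruction}".encode()).hexdigest()
        ids.append(digest[:12])  # shortened ID for readability
        parent = digest
    return ids


def reused_steps(old: list[str], new: list[str]) -> int:
    """Count the leading steps of `new` that the old build's cache could supply."""
    count = 0
    for old_id, new_id in zip(step_ids(old), step_ids(new)):
        if old_id != new_id:
            break  # the first changed step invalidates everything after it
        count += 1
    return count


if __name__ == "__main__":
    build_1 = [
        "FROM alpine",
        "WORKDIR /mywork",
        "COPY . /mywork",
        "RUN apk add --no-cache wget",
        'CMD ["echo", "hello"]',
    ]
    # Only the last instruction changes, so the first four steps are reused.
    build_2 = build_1[:-1] + ['CMD ["echo", "goodbye"]']
    print(reused_steps(build_1, build_2))  # 4
```

This is why Dockerfiles usually put rarely changing steps, such as installing packages, before frequently changing ones, such as copying source code. In the example Dockerfile, placing the `RUN` step before `COPY` would let the package installation be reused when only the application files change.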

A Docker image is a read-only template, built from a Dockerfile, from which containers are started.

It is a stateless collection of files: a stack of filesystem layers plus metadata such as the default command to run.

Because images are read-only, running a container never changes the image it was created from. You can use Docker images to package applications that run inside containers, and you can still override the image's default command at run time, for example `docker run alpine echo hi`.

A Docker container is a running instance of a Docker image. It packages the application code together with the libraries required to run it, which makes the application independent of its environment. When a container starts, Docker adds a thin writable layer on top of the image's read-only layers.
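The relationship between an image's read-only layers and a container's writable layer can be modeled with Python's `collections.ChainMap`, which looks keys up through a list of mappings but writes only to the first one. This is an analogy for copy-on-write storage such as overlayfs, not Docker's actual implementation; the file paths and contents are hypothetical.

```python
from collections import ChainMap

# Read-only image layers, listed bottom-up; each maps a file path to its contents.
# Paths and contents are hypothetical.
base_layer = {"/etc/os-release": "Alpine Linux", "/bin/sh": "busybox"}
app_layer = {"/mywork/app.py": "print('hello')"}
image_layers = [base_layer, app_layer]


def start_container(layers: list[dict[str, str]]) -> ChainMap:
    """Stack a fresh, empty writable layer on top of the read-only image layers.

    ChainMap searches its maps front to back, so the topmost layer goes first.
    """
    return ChainMap({}, *reversed(layers))


container = start_container(image_layers)
container["/mywork/app.py"] = "print('patched')"  # write lands in the writable layer
container["/tmp/cache.txt"] = "scratch data"

print(container["/mywork/app.py"])  # print('patched')  (container's view)
print(app_layer["/mywork/app.py"])  # print('hello')    (image layer untouched)

# Removing the container discards its writable layer; a new container
# started from the same image sees only the original image contents.
fresh = start_container(image_layers)
print("/tmp/cache.txt" in fresh)  # False
```

The last lines also show why data written inside a container is lost once the container is removed: it lived only in that container's writable layer, which is why persistent data belongs in volumes.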

Now let's discuss other important tools that Docker provides.

So far we have seen how to run a single container, but applications made up of multiple containers can be run too, with the help of Docker Compose (`docker-compose`).

It lets us define and run multiple containers together as a single application.

Compose uses a YAML file (by default `docker-compose.yml`) to configure the application's services. We then use this YAML file to start the application and all its services with a single command, `docker-compose up`.

With Docker Compose, we can set up a complete environment to run our application and bring it down just as easily with `docker-compose down`.

Compose keeps environments separate by using project names. By default, the project name is the name of the directory containing the YAML file, and it can be changed with the `-p` option.

Remember: when a container is removed (for example, by `docker-compose down`), any data written inside the container is lost. To keep data, store it in volumes, which exist independently of the containers that use them.
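Compose starts services in dependency order (as declared with `depends_on`) and prefixes the containers it creates with the project name, which is what keeps two environments apart. The sketch below mimics both ideas with Python's `graphlib` (Python 3.9+). The services, their dependency graph, and the `<project>-<service>-<index>` naming (modeled on the pattern Compose V2 uses for container names) are illustrative assumptions, not output from Compose itself.

```python
from graphlib import TopologicalSorter

# Hypothetical services and their depends_on relationships,
# standing in for what a docker-compose.yml file would declare.
SERVICES = {
    "db": [],
    "cache": [],
    "api": ["db", "cache"],
    "web": ["api"],
}


def start_order(services: dict[str, list[str]]) -> list[str]:
    """Return an order in which every service starts after its dependencies."""
    # TopologicalSorter expects {node: predecessors}, which matches depends_on.
    return list(TopologicalSorter(services).static_order())


def container_names(project: str, services: dict[str, list[str]]) -> list[str]:
    """Name one container per service, prefixed with the project name."""
    return [f"{project}-{name}-1" for name in start_order(services)]


if __name__ == "__main__":
    # The same service definitions under two project names give separate containers.
    print(container_names("dev", SERVICES))
    print(container_names("staging", SERVICES))
```

Because every name carries the project prefix, starting the same configuration as `dev` and as `staging` never collides.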

A Docker swarm is a group of Docker hosts (nodes) running in swarm mode, made up of one or more manager nodes and any number of worker nodes. The manager nodes are responsible for managing the worker nodes.

If a worker node goes down, the tasks it was running are rescheduled on other healthy nodes. This group of hosts is called a cluster.

Docker Swarm handles such clusters of managers and workers.

Services can easily be scaled up or down by changing their number of replicas, for example with `docker service scale`.
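The toy scheduler below illustrates the two swarm behaviors just described: tasks are spread over the available nodes when a service is scaled, and when a worker goes down its tasks are moved to healthy nodes. The node names, the "least-loaded node first" placement rule, and the function names are assumptions for illustration; Swarm's real scheduler considers more factors.

```python
def place(replicas: int, nodes: list[str]) -> dict[str, int]:
    """Spread `replicas` tasks over `nodes`, always picking the least-loaded node."""
    load = {node: 0 for node in nodes}
    for _ in range(replicas):
        # min() returns the first node with the smallest load, so ties follow list order.
        target = min(nodes, key=lambda node: load[node])
        load[target] += 1
    return load


def node_down(placement: dict[str, int], failed: str) -> dict[str, int]:
    """Reschedule the failed node's tasks onto the remaining healthy nodes."""
    healthy = {node: count for node, count in placement.items() if node != failed}
    for _ in range(placement.get(failed, 0)):
        target = min(healthy, key=lambda node: healthy[node])
        healthy[target] += 1
    return healthy


if __name__ == "__main__":
    nodes = ["worker-1", "worker-2", "worker-3"]
    spread = place(6, nodes)
    print(spread)  # {'worker-1': 2, 'worker-2': 2, 'worker-3': 2}
    print(node_down(spread, "worker-2"))  # {'worker-1': 3, 'worker-3': 3}
    print(place(9, nodes))  # scaling up: 3 tasks on each node
```

The total number of running tasks stays at the desired replica count after the failure; only their placement changes.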

In Swarm, the ingress network (also called the routing mesh) exposes services to the outside world.

Each service can publish a port number; a request sent to that port on any node in the swarm is routed to one of the service's tasks.

An internal DNS server gives every service an entry, so other services can reach it by name.
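The routing just described can be sketched as a small class: a published port is open on every node, an internal DNS table maps a service name to its tasks, and each external request is handed to one of those tasks. Round-robin selection, the service name `web`, port 8080, and the node and task names are assumptions for illustration. The toy DNS returns task addresses directly (similar to Swarm's `dnsrr` endpoint mode; by default Swarm DNS returns a single virtual IP for the service), and none of this reflects how Docker's networking layer is actually implemented.

```python
from itertools import cycle


class RoutingMesh:
    """Toy ingress: any node accepts traffic on a published port and
    forwards it round-robin to one of the service's tasks."""

    def __init__(self, nodes: list[str]) -> None:
        self.nodes = nodes
        self.dns: dict[str, list[str]] = {}  # service name -> task addresses
        self.published: dict[int, str] = {}  # published port -> service name
        self._next: dict[str, cycle] = {}  # round-robin iterator per service

    def create_service(self, name: str, port: int, tasks: list[str]) -> None:
        self.dns[name] = tasks
        self.published[port] = name
        self._next[name] = cycle(tasks)

    def resolve(self, name: str) -> list[str]:
        """Internal DNS lookup, as used by other services."""
        return self.dns[name]

    def request(self, node: str, port: int) -> str:
        """An external request arrives at `node`; return the task that serves it."""
        if node not in self.nodes:
            raise ValueError(f"unknown node {node}")
        service = self.published[port]
        return next(self._next[service])


if __name__ == "__main__":
    mesh = RoutingMesh(["node-1", "node-2", "node-3"])
    mesh.create_service("web", 8080, ["web.1", "web.2"])
    print(mesh.request("node-3", 8080))  # web.1
    print(mesh.request("node-1", 8080))  # web.2
    print(mesh.resolve("web"))  # ['web.1', 'web.2']
```

The point of the sketch is that the node receiving the request does not matter: whichever node is hit, the request reaches a task of the published service.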


A DevOps Engineer by profession and a Technical Blogger by passion. I love to learn anything about DevOps and Cloud computing and at the same time share the knowledge among those who are willing to learn and share the same passion with me.
