02 Jan

Hadoop is a Java-based distributed processing framework used to store and process huge amounts of structured or unstructured data on commodity hardware. It gives organizations a platform on which they can increase their processing power and handle very large volumes of data. This open source software project is used by organizations that have huge amounts of data, in both structured and unstructured forms, to analyze. Typical examples are banks, which need to analyze millions of transactions to find patterns, social networks, which may need to analyze billions of events, and ad networks, which may need to analyze millions of clicks.

This article discusses what Hadoop is, the components of Hadoop, and why one should learn Hadoop, including its career scope.

As discussed above, Hadoop is open source software used to analyze huge amounts of data. Today a vast amount of data is available, much of it generated by social networks, and legacy systems find it quite difficult to analyze. Hadoop provides a core platform for structuring the data so that it can be analyzed easily. Before the advent of Hadoop, storing such data was also expensive. Gartner and IBM have each offered a definition of Hadoop:


Gartner's Merv Adrian defined Hadoop as “Big Data Hadoop is a Hadoop file system, some utilities, and MapReduce”.

According to IBM, “Hadoop is a software project, which enables distributed processing of large data sets across clusters of commodity servers and has a very high degree of fault tolerance. The failures and defects can be detected at the application layer in Hadoop”.
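To make the MapReduce part of these definitions more concrete, here is a minimal sketch of the map, shuffle, and reduce steps as a word count in plain Python. It does not use Hadoop or any Hadoop API. The function names (`map_phase`, `shuffle`, `reduce_phase`, `run_job`) and the sample records are hypothetical and chosen only for illustration, and everything runs in a single process, whereas a real cluster would run the map step on the machines that hold the data.

```python
from collections import defaultdict


def map_phase(record):
    """Map step: emit a (word, 1) pair for every word in one input record."""
    return [(word.lower(), 1) for word in record.split()]


def shuffle(pairs):
    """Shuffle step: group all emitted values by their key."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key, values):
    """Reduce step: combine all values for one key into a single result."""
    return key, sum(values)


def run_job(records):
    # Assumption: a single-process simulation. On a real cluster each record
    # (or block of records) would be mapped on the node that stores it.
    emitted = [pair for record in records for pair in map_phase(record)]
    grouped = shuffle(emitted)
    return dict(reduce_phase(key, values) for key, values in grouped.items())


if __name__ == "__main__":
    # Hypothetical sample data standing in for click or event logs.
    sample = ["click ad click", "view ad", "click view view"]
    print(run_job(sample))  # {'click': 3, 'ad': 2, 'view': 3}
```

Because each record is mapped independently and each key is reduced independently, both steps can be spread across many machines, which is the property that lets this model scale to large data sets.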

Hadoop can scale from a single server up to thousands of machines, each of which offers local computation and storage.


Among Hadoop's core concepts, MapReduce and HDFS are the two basic and essential components. Hadoop is used in various sectors, including finance, healthcare, education and many others. Across the globe, companies have started to migrate their data to Hadoop to increase their efficiency and storage capacity.

Its features make Hadoop a system that any organization can use to handle unstructured data efficiently. The data can be structured using Hadoop tools and then analyzed, so that decisions can be based on it. Other benefits of Hadoop include stability, certainty, accuracy, and better decision-making power for the managers of the organization.

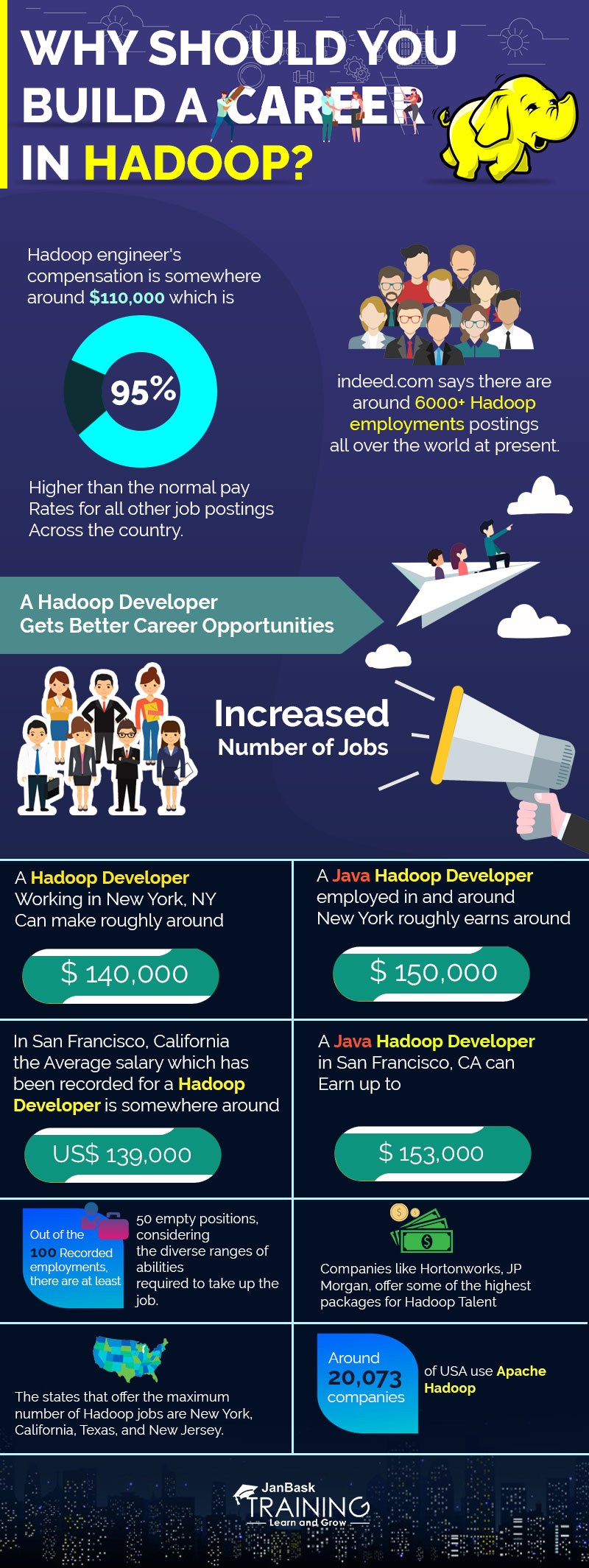

With Hadoop, organizations can store their data at an affordable price, and very large amounts of unstructured data can be processed quickly with Hadoop tools. Many organizations now use Hadoop for data processing and analysis. As a result, the demand for trained and experienced Hadoop professionals has increased, and Hadoop will soon become a must-have skill for organizations that are deeply involved in data or big data.

Hadoop is an emerging skill, and companies that run large-scale data operations are hiring trained Hadoop professionals. Demand for them is rising day by day, which makes them among the most sought-after professionals in the IT sector. The use cases of Hadoop are also increasing. For those who want to build a career in IT, learning Hadoop can open up substantial and long-lasting career opportunities.



Final Words:

The accelerating growth of big data is creating opportunities for both professionals and business owners. Hadoop is emerging as an efficient technology for data processing, and its adoption is growing rapidly. Career opportunities for Hadoop professionals are increasing as well.


The JanBask Training Team includes certified professionals and expert writers dedicated to helping learners navigate their career journeys in QA, Cybersecurity, Salesforce, and more. Each article is carefully researched and reviewed to ensure quality and relevance.
