When you start learning something entirely new, your progress passes through several levels. It takes a few hours to reach the beginner level, days to move from beginner to intermediate, and months to go from intermediate to expert. But learning never ends: you keep learning new things till your last breath. In this write-up, we take a closer look at the best strategy to learn Hadoop quickly.

Who can learn Hadoop?

If you are planning to build a career in Hadoop, check whether you fit one of the following profiles. Hadoop can be learned by:

- Managers who are looking to implement the latest technologies in their work environment.

- Graduates and postgraduates who are looking for a promising career in high-end information technology.

- Software engineers who work in ETL or programming and are looking for great opportunities.

What does it take to be a Hadoop expert?

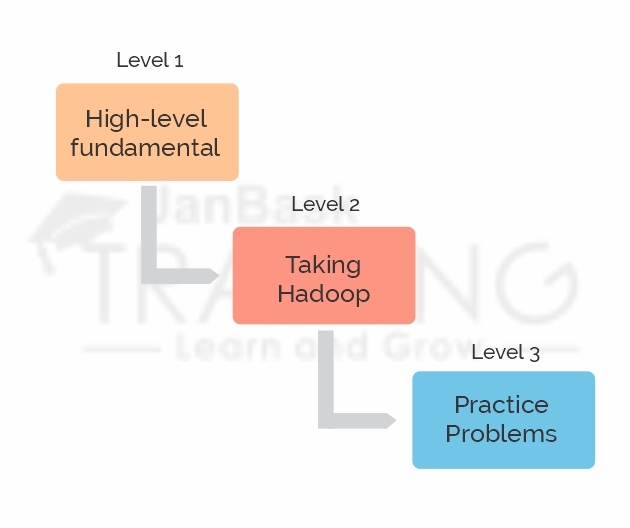

The best strategy we would like to present here for learning Hadoop quickly is the following 3-step learning method.

Learning Strategy to learn Hadoop quickly

- Learning Java: Start your Hadoop learning with Java. Java is the base of Big Data Hadoop: Java is a programming language, and Hadoop is an open-source framework written in Java. MapReduce programs can also be written in other languages (for example, through Hadoop Streaming), but Java is still preferred. For Hadoop, knowledge of Core Java is sufficient, and it will take approximately 5-9 months.

- Learning the Linux operating system: A basic working knowledge of Linux is recommended, because Linux is the usual platform for installing the Hadoop framework. If you already understand Linux, installing Hadoop takes only a few steps. Set aside at least a month for this.

- Learning databases: Fundamental knowledge of SQL is a must for learning Hadoop. You should be comfortable with SQL commands and queries, because Hadoop is used to store and process large amounts of data, and several components of the Hadoop ecosystem (such as Hive and Impala) are queried with SQL-like languages.
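Java is preferred, but it is not the only option: Hadoop Streaming, for example, lets the mapper and reducer be any executable that reads lines from standard input and writes tab-separated key/value lines to standard output. The sketch below mimics that contract with a word count in Python, simulating the map, sort (shuffle), and reduce phases locally. The function names `mapper`, `reducer`, and `run_locally` are hypothetical, and no Hadoop installation is involved.

```python
"""Word count in the Hadoop Streaming style (a local sketch, not a real job).

With Hadoop Streaming, mapper and reducer are ordinary programs that read
lines from stdin and write 'key<TAB>value' lines to stdout. Here they are
plain functions over iterables so the whole flow runs on one machine.
"""
from itertools import groupby


def mapper(lines):
    """Emit 'word\t1' for every word in every input line."""
    for line in lines:
        for word in line.strip().lower().split():
            yield f"{word}\t1"


def reducer(sorted_lines):
    """Sum counts per word.

    Assumes the input is sorted by key, which Hadoop guarantees between
    the map and reduce phases (simulated here with sorted()).
    """
    pairs = (line.split("\t", 1) for line in sorted_lines)
    for word, group in groupby(pairs, key=lambda kv: kv[0]):
        total = sum(int(count) for _, count in group)
        yield f"{word}\t{total}"


def run_locally(lines):
    """Simulate map -> sort (shuffle) -> reduce on a single machine."""
    return list(reducer(sorted(mapper(lines))))


if __name__ == "__main__":
    sample = ["Hadoop stores data", "Spark and Hadoop process data"]
    for out in run_locally(sample):
        print(out)
```

In a real Streaming job, the mapper and reducer would each be a separate script reading `sys.stdin`, passed to the Hadoop Streaming JAR through its `-mapper` and `-reducer` options, and Hadoop itself would handle the sorting between the two phases.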

Summary of the learning objectives

- You should know your purpose for learning Hadoop very well

- Learn about Hadoop’s popularity, its demand in the market, and its scope, and then begin your learning

- Hadoop is a vast area, so try to learn about its various components and their applications

- It always helps to refer to books, and in this case you may refer to titles such as Hadoop For Dummies, Hadoop: The Definitive Guide, and many more

- Follow popular Hadoop bloggers so that you get timely updates on the technology

- If your ultimate goal in learning Hadoop is to get placed in the Hadoop industry, I would suggest taking certification courses; they will help you master Hadoop and give you better direction and guidance in the field

Step 0: Programming skills

- Python: A general-purpose, high-level programming language, used for everything from web-server applications to data analysis. Python is neither tough nor time-consuming to learn.

- Scala: A general-purpose programming language that combines object-oriented and functional programming and is designed to be concise. The difficulty level of learning Scala is medium.

- Java: Also a general-purpose programming language, based on the concepts of classes and objects and designed to have as few implementation dependencies as possible.

- C++: Learning C++ gives you a solid foundation for other programming languages because of the object-oriented concepts it uses.

Step 1: Database skills

- Relational Database: A database that organizes data into tables of rows and columns, with relationships between tables. It is managed and queried using SQL.

- MongoDB (or NoSQL): A cross-platform, document-oriented database program and a popular NoSQL database.
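To make the database skills above concrete, here is a minimal sketch of everyday SQL (creating a table, inserting rows, and aggregating with `GROUP BY`) using Python's built-in `sqlite3` module. The `sales` table, its columns, and the sample rows are hypothetical. Similar `SELECT ... GROUP BY` queries are what you would later write in SQL-on-Hadoop tools such as Hive, although dialect details differ.

```python
"""Basic SQL with Python's built-in sqlite3 module (an illustrative sketch).

The 'sales' table and the sample rows are hypothetical.
"""
import sqlite3


def total_sales_by_region(rows):
    """Load (region, amount) rows into an in-memory table and aggregate them."""
    conn = sqlite3.connect(":memory:")  # throwaway database that lives in RAM
    try:
        conn.execute(
            "CREATE TABLE sales (region TEXT NOT NULL, amount REAL NOT NULL)"
        )
        # Parameterized inserts: '?' placeholders avoid SQL injection.
        conn.executemany("INSERT INTO sales (region, amount) VALUES (?, ?)", rows)
        cursor = conn.execute(
            "SELECT region, SUM(amount) AS total "
            "FROM sales GROUP BY region ORDER BY total DESC"
        )
        return cursor.fetchall()
    finally:
        conn.close()


if __name__ == "__main__":
    sample = [("east", 120.0), ("west", 80.0), ("east", 30.0), ("north", 95.5)]
    for region, total in total_sales_by_region(sample):
        print(region, total)
```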

Step 2: Analytical skills

- Problem-Solving: Big Data Hadoop revolves around problem-solving, so you need to be comfortable with this skill.

- Statistics: Hadoop work involves calculations and mathematical skills for analyzing data.

- Quantitative Analysis: While working with Hadoop, you will also be working with exact data and figures.
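The statistics and quantitative-analysis skills above start with simple descriptive measures. This small sketch uses Python's standard `statistics` module; the `daily_jobs` values are made-up sample numbers, and `summarize` is a hypothetical helper name.

```python
"""Descriptive statistics with Python's standard library (a small sketch).

The sample values below are made up for illustration, not real data.
"""
import statistics


def summarize(values):
    """Return basic descriptive statistics for a list of numbers (needs >= 2 values)."""
    return {
        "count": len(values),
        "mean": statistics.mean(values),
        "median": statistics.median(values),
        "stdev": statistics.stdev(values),  # sample standard deviation
        "min": min(values),
        "max": max(values),
    }


if __name__ == "__main__":
    daily_jobs = [12, 15, 11, 18, 14, 15, 20]  # hypothetical jobs run per day
    print(summarize(daily_jobs))
```

For these sample values, the mean and median are both 15 and the sample standard deviation is the square root of 10 (about 3.16).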

Step 3: Cloud skills

- Google Cloud Platform: Enough to understand how to store and manipulate data in the cloud.

Step 4: Data warehousing

- Hive: Apache Hive is a data warehouse project built on top of Apache Hadoop that provides data query and analysis through a SQL-like interface.

- PostgreSQL: An open-source relational DBMS that emphasizes extensibility and technical standards compliance.

- Apache Spark: It provides an interface for programming entire clusters with implicit data parallelism and fault tolerance.

- MapReduce: A programming model for processing large volumes of data in parallel across a cluster.

- HDFS: The Hadoop Distributed File System is designed to run on commodity hardware. It is the primary storage system of Hadoop.
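The HDFS bullet can be made concrete with a little arithmetic. HDFS splits each file into fixed-size blocks and stores several replicas of every block. This sketch assumes the common defaults of a 128 MB block size (`dfs.blocksize`) and a replication factor of 3 (`dfs.replication`); real clusters can be configured differently, and `hdfs_layout` is a hypothetical helper, not a Hadoop API.

```python
"""How HDFS lays out a file: block count and raw storage (an arithmetic sketch).

Assumes the common defaults dfs.blocksize = 128 MB and dfs.replication = 3;
actual clusters may override both.
"""

MB = 1024 * 1024
DEFAULT_BLOCK_SIZE = 128 * MB  # assumed dfs.blocksize
DEFAULT_REPLICATION = 3        # assumed dfs.replication


def hdfs_layout(file_size_bytes, block_size=DEFAULT_BLOCK_SIZE,
                replication=DEFAULT_REPLICATION):
    """Return (number of blocks, size of the last block, raw bytes stored).

    The last block holds only the remaining bytes; HDFS does not pad it to
    the full block size, so raw storage is file size times replication.
    """
    if file_size_bytes <= 0:
        return 0, 0, 0
    blocks = -(-file_size_bytes // block_size)  # integer ceiling division
    last_block = file_size_bytes - (blocks - 1) * block_size
    raw_storage = file_size_bytes * replication
    return blocks, last_block, raw_storage


if __name__ == "__main__":
    size = 1000 * MB  # a hypothetical 1000 MB file
    blocks, last, raw = hdfs_layout(size)
    print(f"{blocks} blocks, last block {last // MB} MB, "
          f"{raw // MB} MB stored across replicas")
```

For a hypothetical 1000 MB file, this gives 8 blocks (seven full 128 MB blocks plus a 104 MB final block) and 3000 MB of raw storage across the replicas.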

Your learning time depends on the platform you choose

Self-Learning Platform for Hadoop

If you choose to learn Hadoop on your own, it will take approximately 3-4 months, because you have to Google every query and find the answers yourself. You are your own teacher.


Hadoop Training from Industry experts

If you opt for training, as the majority of people do, you can learn Hadoop in merely 2-3 months. You can take up certificate-based training, which can boost both your skills and your resume at a phenomenal rate.

Overall, here is what you will be doing during this learning period:

- Studying from a mix of platforms

- Understanding Hadoop

- Solving practical problems

- Implementation of Hadoop

Hadoop as your first technology or second

If you are planning to learn Hadoop as your first technology, think twice. Although there are no formal prerequisites for learning Hadoop, you should be proficient in Java, Linux, and SQL. So, in practice, Hadoop cannot be your first technology. Fundamental knowledge of object-oriented programming will ease your Hadoop learning.

In short, counting the basic languages (Java), database concepts (SQL), the operating system (Linux), programming practice, and the other concepts, learning Hadoop will take at least one year.

We understand your zeal to learn Hadoop, which is why we have made an effort to shorten the time it takes. You can join the six-week Hadoop training at JanBask Training to achieve your dream of becoming a Data Scientist in a shorter period.


Hadoop topics to learn

- Introduction to Big data and Hadoop Ecosystem

- HDFS and YARN

- MapReduce and Sqoop

- Basics of Hive and Impala

- Working with Hive and Impala

- Types of Data Formats

- Advanced Hive concepts and data file partitioning

- Apache Flume and HBase

- Pig

- Basics of Apache Spark

- RDDs in Spark

- Implementation of Spark Applications

- Spark Parallel Processing

- Spark RDD Optimization techniques

- Spark Algorithm

- Spark SQL

- Apache Hadoop file storage

- Distributed processing on an Apache Hadoop cluster

- Aggregating Data with Pair RDDs

- Querying tables and views with Apache Spark SQL

- Distributed processing

- Common patterns in Apache Spark Data Processing

- Working with DataFrames and Schemas

- Apache Spark Streaming: Processing many batches

- Analyzing Data with DataFrame Queries

- Writing, Configuring and Running Apache Spark Applications

- How to use Spark SQL to query data

- How to do real-time processing using Spark Streaming

- How to create applications with Apache Spark 2

- How to write applications that use core Spark

- How to work with big data from a distributed file system

- How to execute Spark applications on a Hadoop cluster

Objectives of learning Hadoop

- Know how to structure, analyze, and interpret big data

- Know how to solve real-world problems and questions

- Know how to derive big data insights with the aid of tools and systems

- Know how to use Hadoop with MapReduce, Spark, Pig, and Hive

- Know how to perform predictive modeling and use graph analytics

Career with Hadoop

It is an undeniable fact that Hadoop skills are in great demand. Hadoop can accelerate your career growth as well as increase your pay package. Hadoop is a promising career path, and it has the potential to improve your job prospects whether you are a fresher or an experienced professional.

Job roles after learning Hadoop

After completing your learning, you can pursue various job roles associated with Hadoop technology:

- Hadoop Developer

- Hadoop Administrator

- Data Scientist

- Software Developer

- Data Analyst

- Hadoop Architect

- MapReduce Developer

- DevOps Engineer

- Data Developer

- Data Security Admin

- Data Visualizer

- Data Architect

The average salary of a Hadoop professional in the US is $90,950 per year.

Final Words

There are three types of learners: Casual Learners, Career Advancers, and Career Changers.


Casual Learners pick up skills as time allows them. They don’t rush their learning; they just try out new things.

Career Advancers know that skills are what will help them get ahead. They know what to study and take one bite of the cookie at a time.

Career Changers are passionate and goal-oriented. They learn consistently and stay closely focused on their topics.

Which of the three learners are you?

The more time you can give, the better, but do what you can! Give 5-15 hours a week to establish yourself in the Hadoop community. In the end, it depends on your own learning pace. Do you want to run a race, a half marathon, or a full marathon? How urgent your goal is determines how intense your timeline needs to be, and your success comes as soon as you want it to. Happy Learning!
