
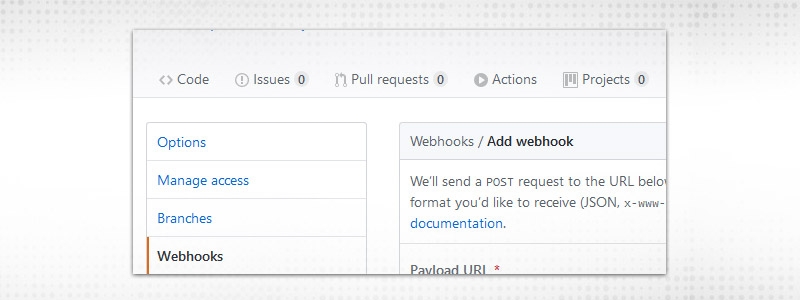

In the last blog, we discussed the Jenkins configuration settings that help in managing our jobs, with a demo. Now we will look at how to create Jenkins build jobs. A Jenkins build job can be triggered manually, but ideally it should be triggered automatically on every push or on whatever other hooks are configured. We will discuss hooks in this blog as well, since the Jenkins build job pipeline will be executed on the basis of these (web)hooks.

So, let’s start!

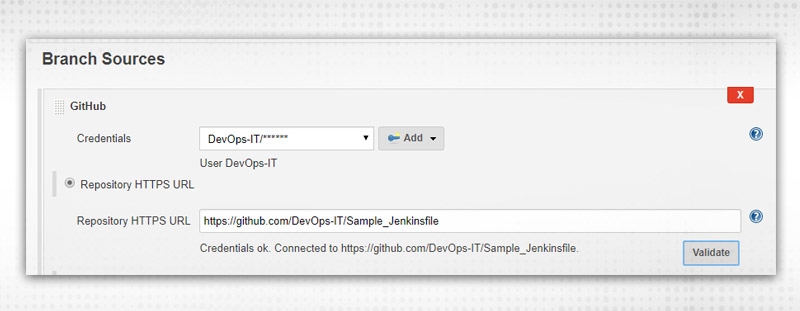

Enter the credentials and the repository URL, then press Validate to verify that the connection is OK.

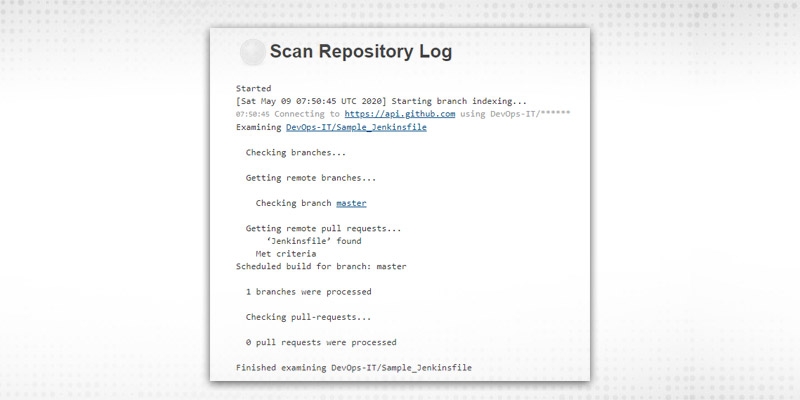

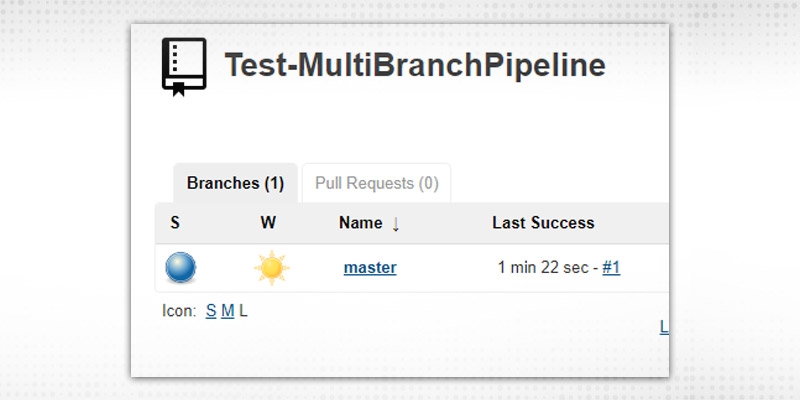

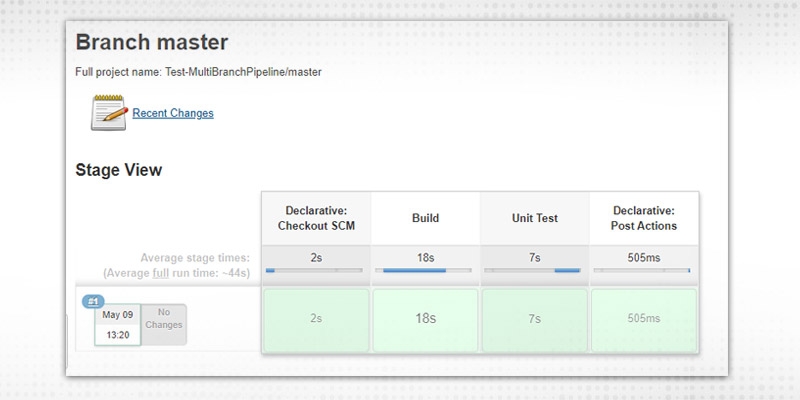

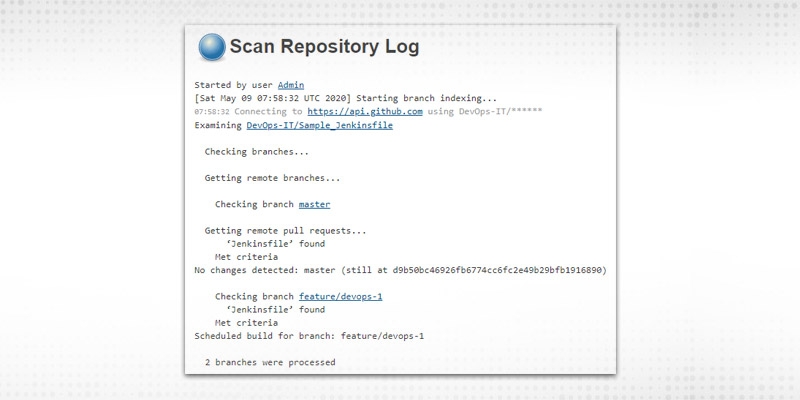

As you can see, only one branch is processed, which is master. Jenkins creates a job named after the branch and executes it.
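A multibranch job runs the Jenkinsfile it finds in each discovered branch. The sketch below shows roughly what such a file could contain. It is not the author's actual Jenkinsfile; the stage name and the echo message are placeholders chosen for illustration.

```groovy
// Jenkinsfile at the root of the repository (illustrative sketch only)
pipeline {
    agent any
    stages {
        stage('Build') {
            steps {
                // BRANCH_NAME is provided by Jenkins for multibranch pipeline jobs
                echo "Building branch ${env.BRANCH_NAME}"
            }
        }
    }
}
```

Because the same file lives in every branch, each branch job reports its own name here without any per-branch configuration.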

Now create a feature branch and push it to the remote:

    git checkout -b feature/DevOps-1
    git push origin feature/DevOps-1

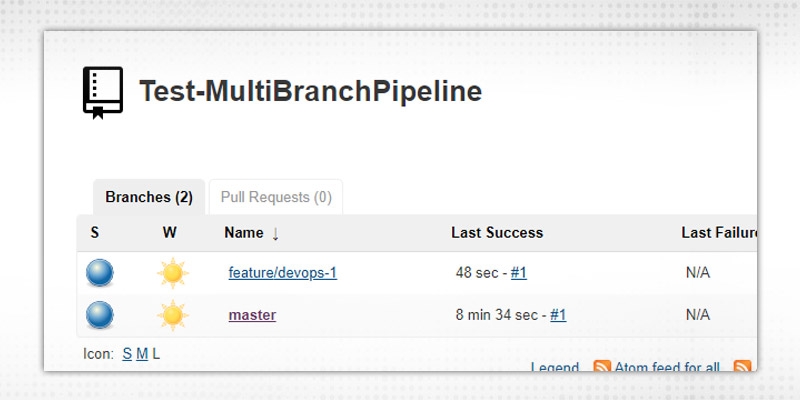

The scan now includes the feature branch as well. Check it from the job perspective:

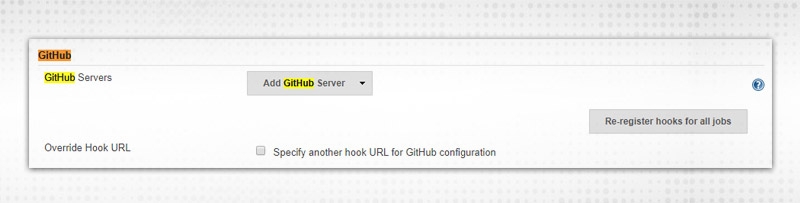

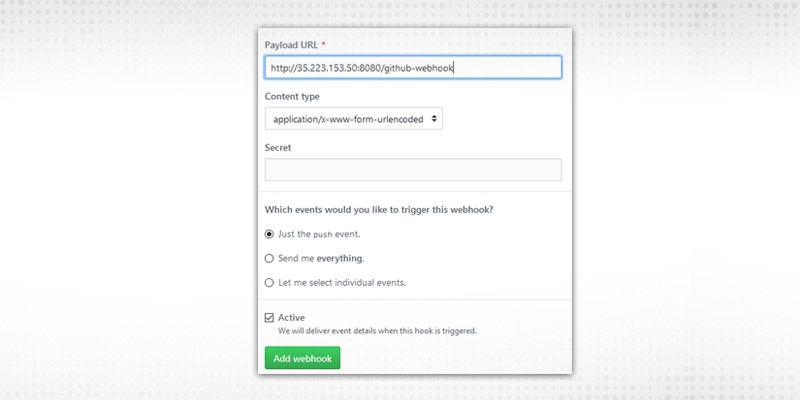

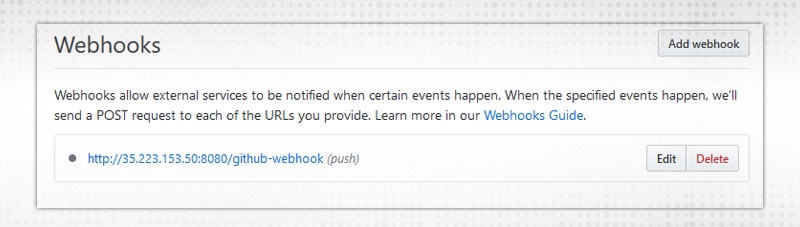

Webhook is created.

Now, the build is configured to trigger automatically.

As such, there is no dedicated “pre” block in a Jenkins build job pipeline, but some things can be done as preconfiguration at the start of the pipeline. Build parameters, discussed below, are one of the basic sections used for this.

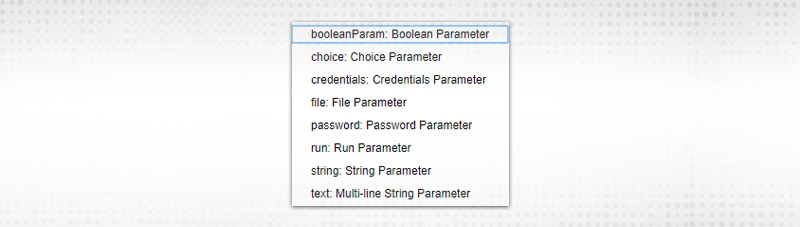

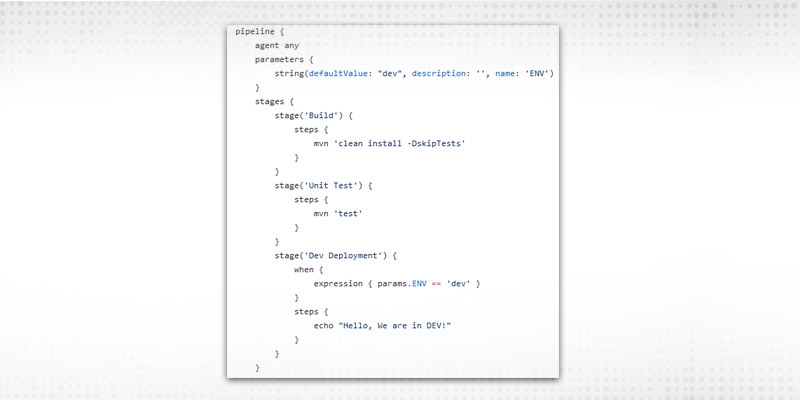

There are multiple scenarios, but here we will discuss the most common one: Jenkins build job parameters, also known as “Parameterized Pipelines”.

For example:

    parameters {
        booleanParam defaultValue: false, description: '', name: 'BOOL'
        choice choices: ['dev', 'stage'], description: '', name: 'CHOICE'
        credentials credentialType: 'com.cloudbees.plugins.credentials.impl.UsernamePasswordCredentialsImpl', defaultValue: 'a096c812-1543-4328-b3e0-33cc5117045d', description: '', name: 'CRED', required: false
        file description: '', name: ''
        password defaultValue: '', description: '', name: 'PASSWD'
        run description: '', filter: 'ALL', name: 'RUN', projectName: ''
        string defaultValue: '', description: '', name: 'ENV', trim: false
        text defaultValue: '', description: '', name: 'MULSTR'
    }
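Once declared, parameter values are available inside the pipeline through the `params` object. Here is a minimal sketch that reuses the `CHOICE` and `BOOL` names from the block above; the descriptions and the stage name are assumptions added for illustration.

```groovy
pipeline {
    agent any
    parameters {
        // Names taken from the example above; descriptions are illustrative
        choice choices: ['dev', 'stage'], description: 'Target environment', name: 'CHOICE'
        booleanParam defaultValue: false, description: 'Example toggle', name: 'BOOL'
    }
    stages {
        stage('Show parameters') {
            steps {
                // Each parameter is read as params.<NAME>
                echo "CHOICE=${params.CHOICE}, BOOL=${params.BOOL}"
            }
        }
    }
}
```

For a `choice` parameter, the first entry in the list (`dev` here) acts as the default.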

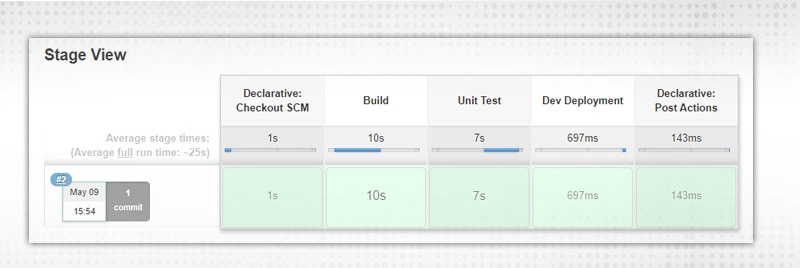

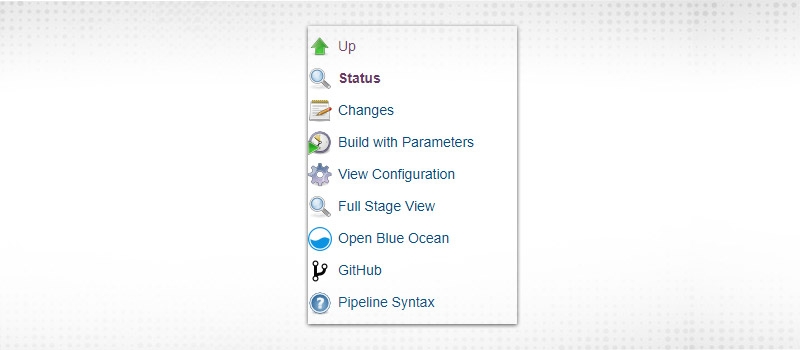

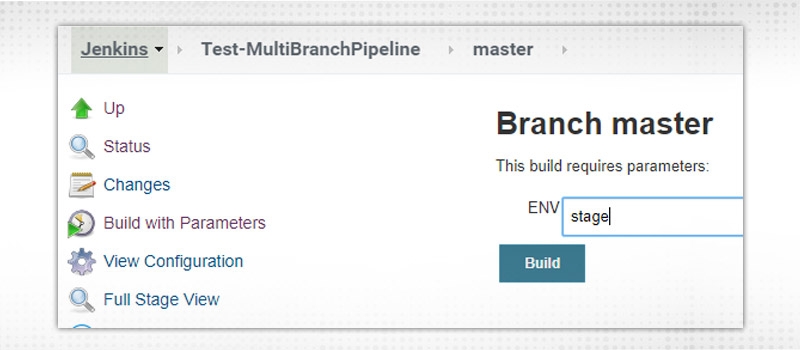

“Build Now” has also changed to “Build with Parameters”, because our build now requires parameters to run:

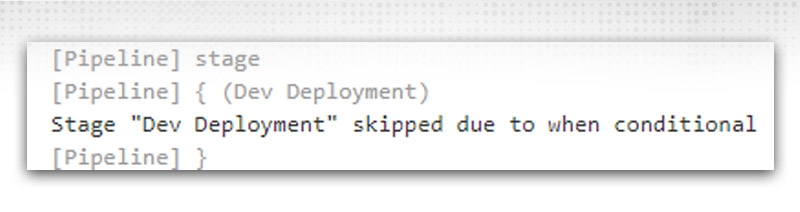

We can verify that the “Dev deployment” stage is not executed; see the console output:

Stage skipped due to “when” condition
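A skipped stage like this comes from a `when` directive on the stage. The exact condition used in the demo is not shown in the text, so the sketch below assumes the stage is gated on the `CHOICE` parameter from the earlier example. The echo step is a placeholder for real deployment steps.

```groovy
pipeline {
    agent any
    parameters {
        choice choices: ['dev', 'stage'], description: 'Target environment', name: 'CHOICE'
    }
    stages {
        stage('Dev deployment') {
            when {
                // Assumed condition: run this stage only when 'dev' is selected
                expression { params.CHOICE == 'dev' }
            }
            steps {
                echo 'Deploying to dev (placeholder step)'
            }
        }
    }
}
```

Running the job with `CHOICE` set to `stage` would skip this stage and leave the rest of the pipeline unaffected.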

Different blocks can be specified depending on project requirements and complexity. Jenkins build jobs use these options to build complex pipelines and reduce manual effort.

There are various actions that need to be performed after all stages or jobs have completed. Two of the prominent conditions for executing post actions, both used in this blog, are “always” (run regardless of the build result) and “success” (run only when the build succeeds).

Normally, the post section is placed at the end of the pipeline. It contains steps just like a stage block, but they run depending on the conditions described above. The syntax looks like this:

    post {
        always {
            cleanWs()
        }
    }

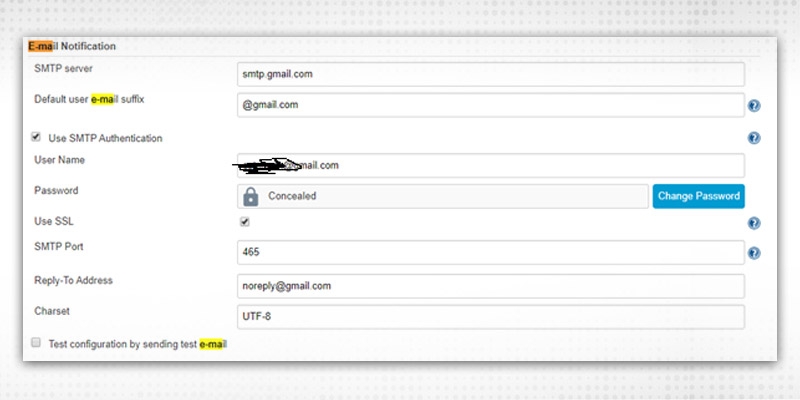

The Jenkins build job example above always cleans up the workspace, irrespective of the build result. Generally, notifications and cleanup activities are done in post actions. Notifications can be set up for Slack, Skype, email, or GitHub. In this blog, we will discuss setting up email notifications.

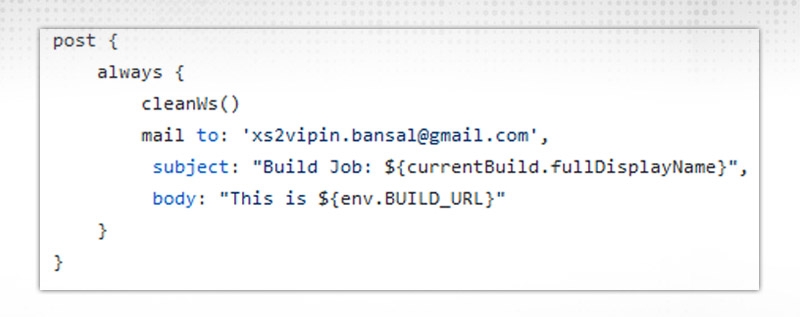

Then specify the email notification in the Jenkinsfile:

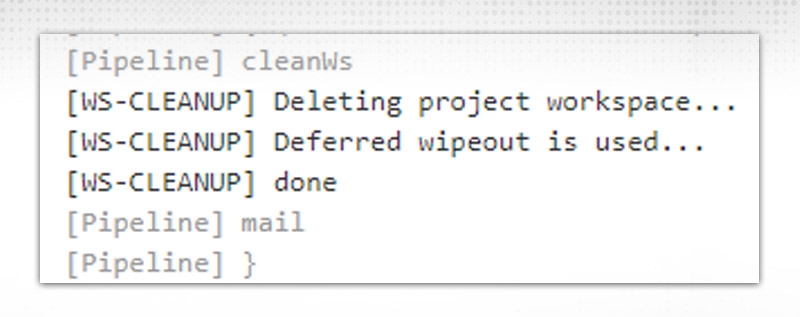

In this post section, we used the “always” block, which is executed every time irrespective of the build result. So, when your changes are committed and the Jenkins build job runs, it cleans up the workspace and then sends mail to the respective recipients:

    emailext body: 'Contents ', recipientProviders: [culprits(), developers()], subject: 'Build Job'

Jenkins build jobs capture these recipient details from the GitHub commits included in the build.
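Putting the pieces together, the post section described above might look like the following sketch. It assumes the Workspace Cleanup plugin (for `cleanWs`) and the Email Extension plugin (for `emailext`) are installed and that mail settings are configured in Jenkins. The build stage is a placeholder.

```groovy
pipeline {
    agent any
    stages {
        stage('Build') {
            steps {
                echo 'Build steps go here (placeholder)'
            }
        }
    }
    post {
        always {
            // Runs regardless of the build result
            cleanWs()
            emailext body: 'Contents ',
                     recipientProviders: [culprits(), developers()],
                     subject: 'Build Job'
        }
    }
}
```

Keeping both steps under `always` means a failed build still gets cleaned up and reported.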

Similarly, for Slack notifications, we have to install the Slack plugin, configure it under “Configure System” in Jenkins, and then use it in the Jenkinsfile as shown below:

    post {
        success {
            slackSend channel: '#team-org',
                      color: 'good',
                      message: "The pipeline ${currentBuild.fullDisplayName} completed successfully."
        }
    }
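The same pattern extends to other build results. As a sketch, a `failure` condition could post to the same channel with a different colour. The message wording and the `BUILD_URL` link are assumptions for illustration, and the Slack plugin must already be configured as described above.

```groovy
pipeline {
    agent any
    stages {
        stage('Build') {
            steps {
                echo 'Build steps go here (placeholder)'
            }
        }
    }
    post {
        failure {
            // Runs only when the build fails
            slackSend channel: '#team-org',
                      color: 'danger',
                      message: "The pipeline ${currentBuild.fullDisplayName} failed. See ${env.BUILD_URL}"
        }
    }
}
```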

node {

checkout scm }

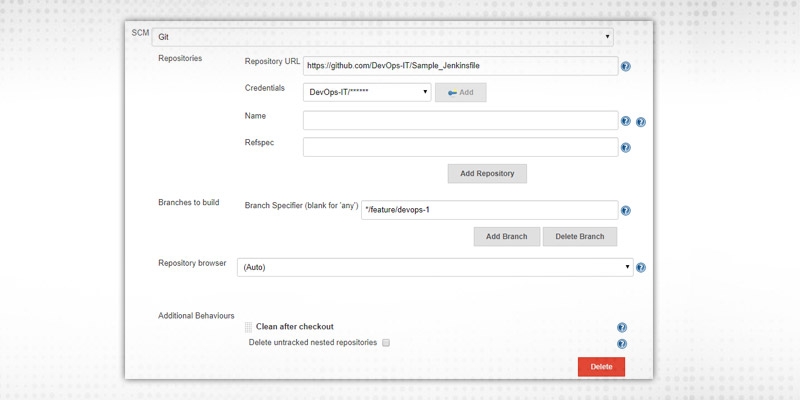

in Jenkinsfile. For some complex behaviour, like if we want to check out some feature branch or we want to do some cleaning after checkout, then normal “checkout” will not work for us. We have to create complex “checkout” options to make this possible.

Suppose we want to capture the scenario just described: check out a feature branch and clean up after checkout. The equivalent checkout statement will be:

    checkout([$class: 'GitSCM',
              branches: [[name: '*/feature/devops-1']],
              doGenerateSubmoduleConfigurations: false,
              extensions: [[$class: 'CleanCheckout']],
              submoduleCfg: [],
              userRemoteConfigs: [[credentialsId: 'a096c812-1543-4328-b3e0-33cc5117045d', url: 'https://github.com/DevOps-IT/Sample_Jenkinsfile']]])

This checks out only the matching feature branch, and the “CleanCheckout” extension extends the checkout so that the workspace is cleaned after the checkout completes.
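In a declarative pipeline, Jenkins performs a default checkout before the stages run, so a custom checkout like this is usually paired with the `skipDefaultCheckout()` option. The sketch below shows one way to wire that up. The stage name is an assumption, and the credentials ID and URL are the ones used in the text.

```groovy
pipeline {
    agent any
    options {
        // Skip the implicit "checkout scm" so the explicit checkout below is the only one
        skipDefaultCheckout()
    }
    stages {
        stage('Checkout') {
            steps {
                checkout([$class: 'GitSCM',
                          branches: [[name: '*/feature/devops-1']],
                          extensions: [[$class: 'CleanCheckout']],
                          userRemoteConfigs: [[credentialsId: 'a096c812-1543-4328-b3e0-33cc5117045d',
                                               url: 'https://github.com/DevOps-IT/Sample_Jenkinsfile']]])
            }
        }
    }
}
```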

Just to summarise: in this blog, we went through a Jenkins build job example and set up a multibranch pipeline job so that every developer working in their own branch can test whether their code is breaking, or will break after merging. We set up a webhook for “push” events; similarly, one can be set up for the creation of pull requests or for merges to master. This helps catch issues during the development phase itself. Notifications can be set up to point out the issues and the person responsible for them, so everyone is notified in real time rather than having to wait for the job to complete.

In the next blog, we will discuss test cases and test scenarios as testing is also a major part of any software process or lifecycle. Unit testing can be easily integrated with Jenkins and is very effective in generating and sharing test reports. So, see you all in the next blog with test cases. Keep continuing your efforts in setting up a multibranch pipeline job and share your feedback.


As an experienced DevOps professional, I have a good understanding of Change and Configuration Management as well. I like to meet new technical challenges and find effective solutions to meet the needs of the project. I believe that Sharing is Learning.
