
Kubernetes is one of the most widely adopted container orchestration tools and a buzzword among tech professionals, because it is approachable to learn and is deployed by organizations of every size, including Google, Shopify, Slack, New York Times, Yahoo, and eBay.

Google open-sourced Kubernetes in 2014, and since then many companies have adopted it to automate the deployment, scaling, and management of containerized applications across multiple environments.

Companies use Kubernetes because:

Despite the popularity of this tool, companies still lack skilled and certified Kubernetes professionals. If you have learned the tool through Kubernetes Certification Training online and have a big interview lined up in the coming weeks, here are the 50 Kubernetes interview questions and answers for 2025, divided into 4 categories:

Let’s get started with the top Kubernetes interview questions in each of these categories, one by one!


This category covers interview questions about the general working of Kubernetes.

Kubernetes is a container orchestration tool used for automating container deployment, scaling up and down, and load balancing across multiple environments. Having originated at Google, the tool has excellent community support and works seamlessly with cloud-native applications. Kubernetes is not just a containerization platform; it is a multi-container management solution.

Docker builds images and runs containers from them, and these individual containers need to communicate with each other, which is where Kubernetes comes in. Docker builds the containers, and Kubernetes helps them communicate. Containers running on multiple hosts can be easily linked and orchestrated by Kubernetes.

| Parameters | Kubernetes | Docker Swarm |
| --- | --- | --- |
| GUI | Kubernetes Dashboard is the GUI | Has no GUI |
| Installation & cluster configuration | Setups are quite complicated but the cluster is robust. | Setup is easy but the cluster is not robust. |
| Auto-scaling | Can do auto-scaling. | Cannot do auto-scaling. |
| Scalability | Scales fast. | Scales 5 times faster than Kubernetes. |
| Load Balancing | Manual support needed for load balancing traffic between containers & pods. | Does auto load balancing of traffic between containers in clusters. |
| Data volumes | Can only share storage volumes with containers in the same pod. | Can share storage volumes with other containers. |
| Rolling updates and rollbacks | Does rolling updates and automatic rollbacks. | Can do rolling updates but no automatic rollbacks. |
| Logging and monitoring | Has in-built tools to perform logging and monitoring. | Requires 3rd party tools like ELK stack to do logging and monitoring. |

Or

Container orchestration is the process of managing the life cycles of containers, especially in large and dynamic environments. The services in the individual containers are kept in sync to fulfill the needs of the application as a whole. Container orchestration is used to regulate and automate tasks such as:

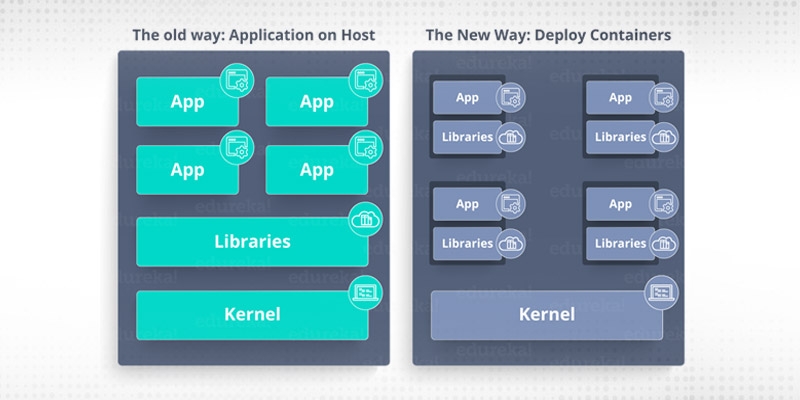

When you deploy the application on hosts:

There is an operating system with a kernel, and the libraries the application requires are installed on that operating system.

In this kind of setup, you can have several applications, and all of them share the libraries present in the operating system.

When you deploy an application in a container:

In this architecture, the kernel is the only thing shared between all the applications.

Every application has its own libraries and binaries, isolated from the rest of the system, and no other application can access them.

For example, if one app needs Python, only that app gets Python; if another application needs Java, only that application has access to Java.

Automated Scheduling - Kubernetes has an advanced scheduler that places containers on cluster nodes.

Self-healing capabilities - Can restart, reschedule, or replace containers that have died.

Horizontal scaling and Load balancing - Kubernetes can scale applications up and down as requirements change.

Automated rollouts and rollbacks - Can perform rolling updates and automated rollbacks for containerized applications.
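The horizontal scaling feature above is usually driven by the Horizontal Pod Autoscaler, whose documented rule is desiredReplicas = ceil(currentReplicas × currentMetric / targetMetric). Below is a minimal Python sketch of that arithmetic. The function name and the min/max clamp defaults are assumptions made for illustration, and the real autoscaler also applies tolerances and stabilization windows that are not modeled here.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """Return the replica count suggested by the HPA scaling ratio.

    min_replicas and max_replicas are illustrative bounds, not cluster defaults.
    """
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Clamp the result to the configured bounds.
    return max(min_replicas, min(max_replicas, raw))


if __name__ == "__main__":
    # 4 pods at 90% average CPU with a 60% target: scale up.
    print(desired_replicas(4, 90.0, 60.0))
    # 4 pods at 30% average CPU with a 60% target: scale down.
    print(desired_replicas(4, 30.0, 60.0))
```

The same ratio works for any metric, as long as the current and target values use the same unit.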

A Kubernetes cluster is a set of nodes used for running containerized applications, so when you are running Kubernetes, you are running a cluster. A cluster contains a control plane and one or more compute machines (nodes).

The control plane maintains the desired state of the cluster, such as which applications are running and which container images they use.

The nodes, in turn, run the applications and the workloads.

Clusters are the heart of Kubernetes: they give it the ability to schedule and run containers across a group of machines, whether physical, virtual, on-premise, or in the cloud. Kubernetes containers aren’t tied to any particular machine; they are abstracted across the cluster.

Heapster is a performance monitoring and metrics collection system compatible with Kubernetes versions 1.0.6 and above. It collects not only performance metrics for your workloads, containers, and pods, but also events and other signals generated by the cluster. Heapster has since been deprecated and retired, and Metrics Server is its usual replacement in current clusters.

Google Kubernetes Engine (GKE), formerly called Google Container Engine, is Google Cloud's managed cluster management and orchestration service, built on the open-source Kubernetes system. It runs and maintains Docker containers and clusters, schedules containers into a cluster, and manages them automatically based on the defined requirements.

Kubectl is the command-line tool with which you pass commands to the cluster. It lets you run commands against a Kubernetes cluster and offers multiple ways of creating and managing Kubernetes components.

Minikube is a tool for easily running Kubernetes locally. It runs a single-node Kubernetes cluster inside a virtual machine.

Kubelet is an agent service that runs on each node. It works from the container descriptions given in the PodSpec and makes sure the described containers are running and healthy.
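To make the PodSpec idea concrete, here is a small Python sketch. The manifest is written as a Python dict that mirrors the fields of a Pod manifest; the pod name, the log-agent image, and the containers_to_start helper are hypothetical and only illustrate the comparison between what the spec describes and what is running. The real kubelet does far more (probes, restarts, volume mounts).

```python
# A Pod manifest expressed as a Python dict; the same fields appear in YAML manifests.
pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "web", "labels": {"app": "nginx"}},
    "spec": {
        "containers": [
            {"name": "nginx", "image": "nginx:1.25"},
            # Hypothetical sidecar image, used only for illustration.
            {"name": "log-agent", "image": "example/log-agent:1.0"},
        ]
    },
}


def containers_to_start(pod_manifest: dict, running: set) -> list:
    """Return names of containers the PodSpec describes that are not running."""
    wanted = [c["name"] for c in pod_manifest["spec"]["containers"]]
    return [name for name in wanted if name not in running]


if __name__ == "__main__":
    # Only nginx is up, so the sketch reports the missing sidecar.
    print(containers_to_start(pod, {"nginx"}))
```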

A cluster of application containers running across multiple hosts needs to communicate. To make that communication happen, we need something that can scale, balance, and monitor the containers. As Kubernetes is a vendor-agnostic tool that can run on any public or private provider, it is a strong choice for simplifying containerized deployment.

A node provides the services needed to run pods. Nodes were also called minions, and depending on the cluster they can run on any physical or virtual machine.

A node is the main worker machine in Kubernetes, and the master components manage every node in the system.

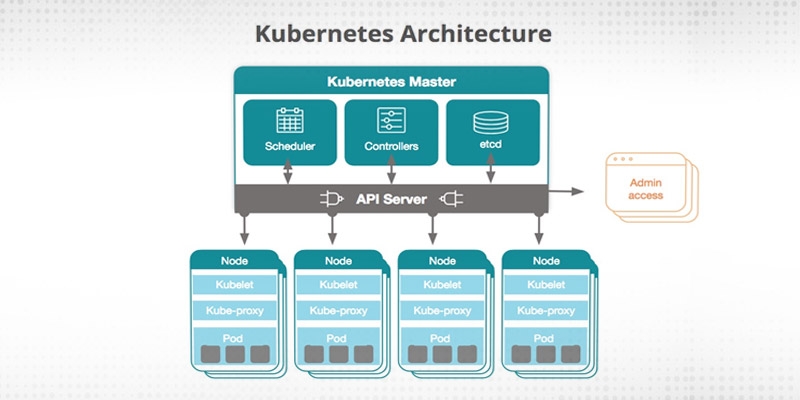

The “master node” and the “worker node” together make up the Kubernetes architecture. Each of them runs multiple built-in services.

The master node has services like kube-controller-manager, kube-scheduler, etcd, and kube-apiserver.

The worker node has services like the container runtime, kubelet, and kube-proxy.


Now we will look into the questions that are related to the architecture of Kubernetes.

Kube-proxy is an important component of any Kubernetes deployment. It implements a network proxy and a load balancer, and it supports the Service abstraction along with other networking operations. Its job is to route traffic to the right container based on the IP address and port number of the incoming request.
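The routing idea can be sketched in a few lines of Python. This is a toy model under stated assumptions: the ServiceRouter class, its method names, and the IP addresses are hypothetical, and round-robin is chosen only for clarity. The real kube-proxy programs kernel-level rules (for example iptables or IPVS) rather than forwarding requests in application code.

```python
from itertools import cycle


class ServiceRouter:
    """Toy routing table: (service IP, port) -> rotating list of pod endpoints."""

    def __init__(self) -> None:
        self._backends = {}

    def set_endpoints(self, service_ip: str, port: int, endpoints: list) -> None:
        """Register the pod endpoints that back a service address."""
        if not endpoints:
            raise ValueError("a service needs at least one endpoint")
        self._backends[(service_ip, port)] = cycle(endpoints)

    def route(self, service_ip: str, port: int) -> str:
        """Pick the next backend for a request addressed to service_ip:port."""
        try:
            return next(self._backends[(service_ip, port)])
        except KeyError:
            raise LookupError(f"no service listening on {service_ip}:{port}") from None


if __name__ == "__main__":
    router = ServiceRouter()
    router.set_endpoints("10.96.0.10", 80, ["10.244.1.5:8080", "10.244.2.7:8080"])
    for _ in range(3):
        print(router.route("10.96.0.10", 80))
```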

Kube-apiserver

Kube-scheduler

Several controller processes run on the master node, compiled together to run as a single process called the “Kubernetes Controller Manager”. The Kubernetes controller manager is a daemon that embeds the core control loops shipped with Kubernetes. A control loop is a non-terminating loop that regulates the state of the system.

In Kubernetes, a controller is a control loop that watches the shared state of the cluster through the API server and makes changes in an attempt to move the current state towards the desired state.
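One pass of such a control loop can be sketched as follows. This is a simplified illustration, not the controller manager's actual code: the reconcile function, the pod naming scheme, and the string "actions" are all assumptions made for the example.

```python
def reconcile(desired_replicas: int, running_pods: list) -> list:
    """Run one pass of a control loop and return the actions it would take.

    Compares the desired state (a replica count) with the current state
    (the list of running pod names) and proposes changes to close the gap.
    """
    actions = []
    diff = desired_replicas - len(running_pods)
    if diff > 0:
        # Too few pods: create the missing ones.
        actions += [f"create pod-{len(running_pods) + i}" for i in range(diff)]
    elif diff < 0:
        # Too many pods: take the surplus from the end of the list.
        actions += [f"delete {name}" for name in running_pods[diff:]]
    return actions


if __name__ == "__main__":
    print(reconcile(3, ["pod-0"]))
    print(reconcile(1, ["pod-0", "pod-1", "pod-2"]))
```

A real controller repeats this comparison continuously, which is why a crashed pod is replaced without anyone issuing a command.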

Controllers that ship with Kubernetes include the replication controller, namespace controller, endpoints controller, and service accounts controller.

etcd is an open-source distributed key-value store written in the Go programming language. It holds and manages the critical information that distributed systems need in order to keep running.

For Kubernetes, it stores the state data, configuration data, and metadata.
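To illustrate what "key-value store" means in practice, here is a minimal in-memory stand-in written in Python. It is not the etcd client API: the TinyKV class and its methods are hypothetical, and the key paths are only illustrative of the hierarchical naming Kubernetes uses. The sketch models two ideas, a revision counter that increases on every write and lookups by key prefix.

```python
class TinyKV:
    """In-memory stand-in for a key-value store with a global revision counter."""

    def __init__(self) -> None:
        self._data = {}
        self.revision = 0

    def put(self, key: str, value: str) -> int:
        """Store a value and return the revision assigned to this write."""
        self.revision += 1
        self._data[key] = (value, self.revision)
        return self.revision

    def get(self, key: str):
        """Return the stored value, or None when the key is absent."""
        entry = self._data.get(key)
        return entry[0] if entry else None

    def list_prefix(self, prefix: str) -> dict:
        """Return every key/value pair whose key starts with prefix, sorted by key."""
        return {k: v for k, (v, _) in sorted(self._data.items()) if k.startswith(prefix)}


if __name__ == "__main__":
    kv = TinyKV()
    kv.put("/registry/pods/default/web", "Running")
    kv.put("/registry/services/default/web", "ClusterIP")
    print(kv.list_prefix("/registry/pods/"))
```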

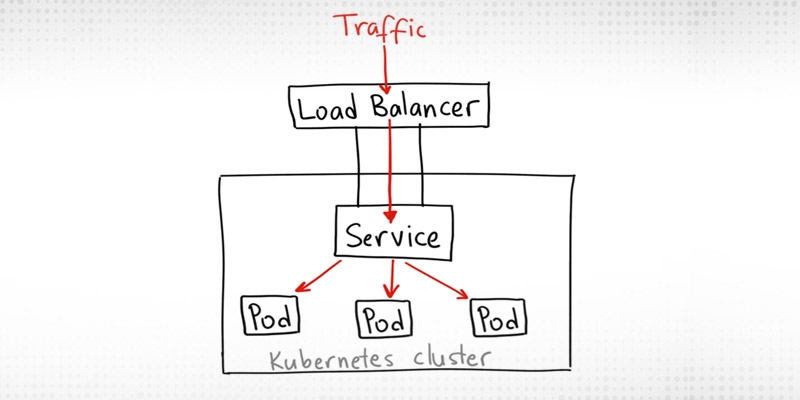

A load balancer in Kubernetes distributes network traffic efficiently among backend services, which is a critical strategy for maximizing scalability and availability. It routes requests across the cluster to optimize performance and keep your application reliable.

There are two kinds of load balancer, external and internal:

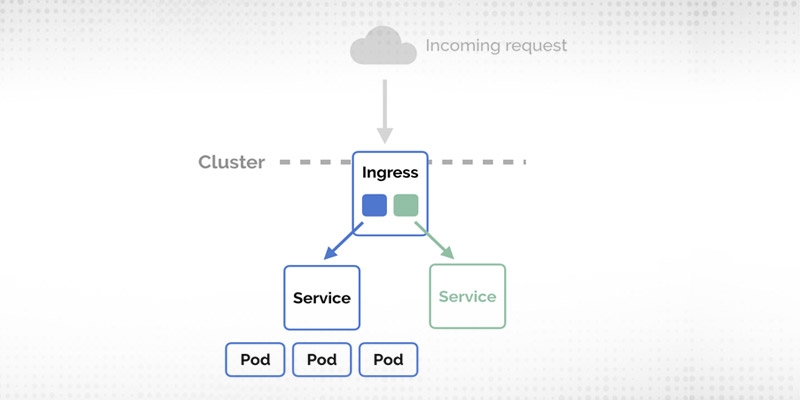

Let’s understand the working of the Ingress network with a detailed example:

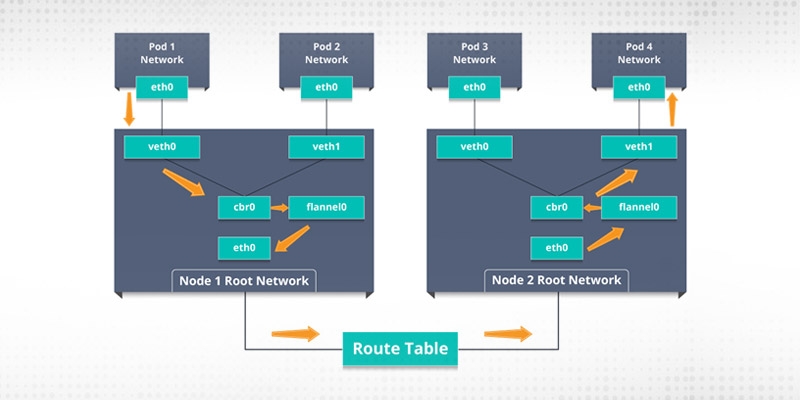

Look at the following chart. Suppose we want a packet to flow from pod 1 to pod 4.

We have four types of services in Kubernetes:

A headless Service is similar to a ‘normal’ Service but doesn’t have a cluster IP. It lets you reach the pods directly, without going through a proxy.
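In a manifest, a Service is made headless by setting its clusterIP field to the literal string None. The sketch below shows that as a Python dict mirroring the manifest fields; the service name, selector, port numbers, and the is_headless helper are assumptions chosen for illustration.

```python
# A Service manifest expressed as a Python dict (same fields as the YAML form).
headless_service = {
    "apiVersion": "v1",
    "kind": "Service",
    "metadata": {"name": "db"},  # hypothetical service name
    "spec": {
        # The literal string "None" (not Python's None) marks the Service as headless.
        "clusterIP": "None",
        "selector": {"app": "db"},
        "ports": [{"port": 5432, "targetPort": 5432}],
    },
}


def is_headless(service: dict) -> bool:
    """Return True when the manifest declares a headless Service."""
    return service.get("spec", {}).get("clusterIP") == "None"


if __name__ == "__main__":
    print(is_headless(headless_service))
```

With no cluster IP to proxy through, a DNS lookup for a headless Service with a selector returns the addresses of the backing pods themselves.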

Kubernetes organizes cluster management into abstractions at different levels: containers, pods, services, and the whole cluster. Each level can be monitored, and this process is called container resource monitoring.

The Cloud Controller Manager is responsible for ensuring consistent storage, separating the cloud-specific code from the Kubernetes-specific code, handling network routing, and managing communication with the cloud services.

These responsibilities can be split into different containers, depending on which cloud platform you are using, which allows the Kubernetes code and the cloud vendor's code to be developed without inter-dependency. The cloud vendor develops its own code and connects it to the cloud-controller-manager when running Kubernetes.

There are 4 types of cloud controller managers:

Both the ReplicaSet and the replication controller ensure that the given number of pod replicas is running at any given time. The only difference between them is that a ReplicaSet can use set-based selectors, while a replication controller uses equality-based selectors.

| Set-based selectors | Equality-based selectors |
| --- | --- |
| Filter keys according to a set of values. A set-based selector looks for pods whose label value is in the given set. For example, with the selector app in (nginx, NPS, apache), a pod whose app label equals nginx, NPS, or apache matches. | Filter by both label key and value. An equality-based selector looks for pods whose label matches exactly. For example, with the selector app=nginx, it looks for pods whose app label equals nginx. |
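The two selector styles compared above can be sketched as small Python predicates over a pod's label dictionary. The function names are hypothetical and the sketch covers only the "equals" and "in" forms used in the examples.

```python
def matches_equality(labels: dict, key: str, value: str) -> bool:
    """Equality-based: the label must exist and equal the given value."""
    return labels.get(key) == value


def matches_set(labels: dict, key: str, allowed: set) -> bool:
    """Set-based `key in (...)`: the label value must be one of the allowed values."""
    return labels.get(key) in allowed


if __name__ == "__main__":
    pod_labels = {"app": "apache", "tier": "frontend"}
    # app=nginx does not match a pod labeled app=apache.
    print(matches_equality(pod_labels, "app", "nginx"))
    # app in (nginx, NPS, apache) does match.
    print(matches_set(pod_labels, "app", {"nginx", "NPS", "apache"}))
```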

Multiple Kubernetes clusters can be controlled and managed as a single cluster with the help of federated clusters. You can create multiple Kubernetes clusters within a data center or cloud and use federation to manage all of them in one place.

Federated clusters achieve this by doing the following two things.

Here are a few ways to ensure security while using Kubernetes:

These are quick multiple-choice questions that recruiters may use as a refresher during your interview. (Answers are at the bottom of this section.)

| Questions | Choices |
| --- | --- |
| 2. The handler invoked by Kubelet to check if a container’s IP address is open or not is? | |
| 3. What did the 1.8 version of Kubernetes introduce? | |
| 4. How to define a service without a selector? | |
| 5. What are the responsibilities of a Replication Controller? | |
| 6. What are the responsibilities of a node controller? | |
| 7. The Kubernetes network proxy runs on which node? | |
| 8. Which of the following are core Kubernetes objects? | |
| 9. Which of them is a Kubernetes Controller? | |
| 10. Kubernetes cluster data is stored in which of the following? | |

Answers: 1-b, 2-c, 3-c, 4-c, 5-d, 6-d, 7-c, 8-d, 9-d, 10-c.

This section presents scenarios that recruiters may pose during the interview to test your reasoning, practical field knowledge, and response speed.

Scene 1 - Imagine an MNC with a highly distributed system, a large number of data centers and VMs (virtual machines), and employees working on multiple tasks.

How do you think such a company manages all the tasks systematically with Kubernetes?

MNCs create and launch thousands of containers, with tasks running across many nodes in a distributed system around the world. They need a platform that gives them scalability, agility, and DevOps practices for cloud-native applications.

They can use Kubernetes to customize their scheduling architecture and support multiple container formats. Kubernetes makes it possible to link container tasks and achieve efficiency, with strong support for container storage and container networking solutions.

Scene 2 - Imagine a company built on a monolithic architecture that handles many products. As it tries to expand its business in today’s scaling industry, the monolithic architecture has started causing problems.

How do you think the company can shift from a monolith to microservices and launch its services in containers?

They can put each of the microservices they build on the Kubernetes platform. They can start by migrating one or two services first, then analyze and monitor them to make sure they run stably. Once everything is working well, they can migrate the rest of the application into the Kubernetes cluster.

Scene 3 - Consider a company that wants to increase the speed and efficiency of its technical operations at minimal cost.

How would you suggest the company achieve this?


The company can adopt a DevOps methodology by building a CI/CD pipeline, although the configuration can take time to get up and running. After the CI/CD pipeline is in place, the company should plan to work in a cloud environment.

In a cloud environment, they can schedule containers on a cluster and orchestrate them with Kubernetes. This will help the company reduce its deployment time (since speed was the goal) and move across environments faster and more efficiently.

Scene 4 - Imagine a company wants to update its deployment methods and build a platform that is much more responsive and scalable.

How do you think this company can go about this to exceed its customers’ expectations?

The company should move from its private data centers (if it has any) to a cloud environment, say AWS. It should also adopt a microservice architecture so that it can use Docker containers. Once the base framework is ready, it can start using Kubernetes for orchestration. This allows the teams to become autonomous in building and delivering applications quickly and at scale.

Scene 5 - There is an MNC with a highly distributed system that wants to solve its monolithic codebase problem.

What solution would you suggest to the company?

I would suggest they shift their monolithic codebase to a microservice design, where every microservice can be treated as a container. They can then deploy and orchestrate these containers with the help of Kubernetes.

Scene 6 - A company wants to optimize the distribution of its workloads by adopting new technologies.

How do you think this company can distribute resources efficiently and quickly?

They should use Kubernetes. Kubernetes helps optimize resource use, as each application consumes only the resources it needs. With Kubernetes as the container orchestration tool, the company can achieve the desired distribution of resources.

Scene 7 - When we shift from a monolith to microservices, problems on the development side decrease but problems on the deployment side increase.

How do you think a company can resolve that?

The team can use a container orchestration platform like Kubernetes and run it in its data centers. It will help the company generate a templated application, deploy it within about 5 minutes, and have actual instances containerized in the staging environment. Dozens of microservices can run in parallel to improve the production rate, and even if a node goes down, its workloads are rescheduled immediately without impacting performance.

Scene 8 - There is a company that wants to provide all the required handouts to its customers.

How do you think the company can dynamically do this?

The company can use Docker environments and put together a cross-functional team to build a web app using Kubernetes. This framework will help the company get the required things into production in a shorter time frame. With such a setup running, the company can provide the required handouts to all its customers across their various environments.

Scene 9 - There is a carpooling company that wants to add servers while scaling its platform.

How do you think the company will achieve that?

The company can go for containerization. Once they deploy their application in containers, they can use Kubernetes for orchestration and container monitoring tools to monitor what happens in the containers.

Containers will help them achieve better capacity planning in the data center, because the abstraction between the hardware and the services leaves them with fewer constraints.

Scene 10 - There is a company that wants to run multiple workloads on diverse cloud infrastructure from a single-tenant physical server (bare metal server) to a public cloud.

How do you think this company can do this by having different interfaces?

The company can turn to microservices and further adopt the Kubernetes orchestration tool. The tool will support the company in running multiple workloads on diverse cloud infrastructures.

In this article, we have listed top Kubernetes interview questions, from basic to advanced, in 4 different formats. These questions on Kubernetes usage and architecture will give you a base for interview preparation. Prepare them thoroughly, revise them, and you will be good to go.

If you are a self-taught learner, consider taking Kubernetes certification training online, as it will help you absorb the concepts and topics around this tool, the job of a Kubernetes professional, and the relevant Kubernetes certifications. During the training, you will also have access to more likely interview questions on Kubernetes, including ones commonly asked by top-tier companies.

How about starting with our Kubernetes Training’s free demo today!

The JanBask Training Team includes certified professionals and expert writers dedicated to helping learners navigate their career journeys in QA, Cybersecurity, Salesforce, and more. Each article is carefully researched and reviewed to ensure quality and relevance.
