02 Jan

Hadoop is an open-source, Java-based programming framework that supports the processing and storage of extremely large data sets in a distributed computing environment. It is part of the Apache project sponsored by the Apache Software Foundation. Market surveys show that the average salary of Big Data Hadoop Developers is around $135K, and government analysts predicted that demand for Big Data managers would grow to a daunting 1.5 million by the end of 2018. To build a career in Hadoop, you first need to land a job, and we will help you with that: our team has prepared a list of some of the most frequently asked Hadoop interview questions.

If you are a Big Data professional preparing for a Hadoop interview, here is a list of the most popular interview questions along with their answers. We have included the questions most frequently asked of both freshers and experienced professionals in the field.

When “Big Data” emerged as a problem, Apache Hadoop evolved as a solution to it. Apache Hadoop is a framework that provides various services and tools to store and process Big Data. It helps in analyzing Big Data and making business decisions from it, which cannot be done efficiently and effectively using traditional systems.

With Hadoop, an organization can run applications on systems with thousands of nodes handling thousands of terabytes of data. Rapid data processing and transfer among nodes keep the system operating even when a node fails, preventing a system-wide failure.

Written in Java, the Hadoop framework is capable of solving problems involving Big Data analysis. Its programming model is based on Google’s MapReduce, and its infrastructure is based on Google’s Big Data and distributed file systems. Hadoop is scalable: more nodes can be added to a cluster as needed.


The minimum amount of data that can be read or written in HDFS is generally referred to as a “block”. The default block size is 64 MB in Hadoop 1.x and 128 MB in Hadoop 2.x and later.
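To make the block size concrete, here is a small illustrative Python sketch (not Hadoop code; the function name `block_layout` is hypothetical) that computes how a file would be cut into blocks. It assumes the 128 MB default and shows that the last block only holds the leftover bytes rather than being padded to a full block.

```python
MB = 1024 * 1024

def block_layout(file_size: int, block_size: int = 128 * MB) -> list[int]:
    """Return the sizes of the blocks a file of file_size bytes would occupy."""
    if file_size < 0 or block_size <= 0:
        raise ValueError("file_size must be >= 0 and block_size > 0")
    full_blocks, remainder = divmod(file_size, block_size)
    sizes = [block_size] * full_blocks
    if remainder:
        # The last block holds only the leftover bytes; it is not padded.
        sizes.append(remainder)
    return sizes

print([s // MB for s in block_layout(300 * MB)])            # [128, 128, 44]
print([s // MB for s in block_layout(300 * MB, 64 * MB)])   # [64, 64, 64, 64, 44]
```

So a 300 MB file takes three blocks with the 128 MB default and five blocks with the older 64 MB default.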

The Block Scanner tracks the list of blocks present on a DataNode and verifies them to detect any checksum errors. Block Scanners use a throttling mechanism to reserve disk bandwidth on the DataNode.
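The idea behind the Block Scanner can be sketched as follows: checksums are recorded per fixed-size chunk when a block is written, and the scanner later recomputes them and flags any chunk that no longer matches. This is a simplified illustration under stated assumptions: it uses Python's `zlib.crc32` (plain CRC32) as a stand-in for the checksum HDFS actually uses, assumes 512-byte chunks (the usual `dfs.bytes-per-checksum` default), and leaves out throttling entirely. The function names are hypothetical.

```python
import zlib

BYTES_PER_CHECKSUM = 512  # assumed chunk size for this sketch

def chunk_checksums(data: bytes, chunk: int = BYTES_PER_CHECKSUM) -> list[int]:
    """Compute one CRC32 per fixed-size chunk, as if stored at write time."""
    return [zlib.crc32(data[i:i + chunk]) for i in range(0, len(data), chunk)]

def scan_block(data: bytes, stored: list[int], chunk: int = BYTES_PER_CHECKSUM) -> list[int]:
    """Return indices of chunks whose recomputed checksum differs from the stored one."""
    current = chunk_checksums(data, chunk)
    bad = [i for i, (a, b) in enumerate(zip(current, stored)) if a != b]
    # A truncated or extended block is also corrupt from the first unmatched chunk on.
    bad.extend(range(min(len(current), len(stored)), max(len(current), len(stored))))
    return bad

block = bytes(range(256)) * 8        # 2048 bytes -> 4 chunks
stored = chunk_checksums(block)
corrupted = bytearray(block)
corrupted[700] ^= 0xFF               # flip bits in byte 700, which lives in chunk 1
print(scan_block(block, stored))             # []
print(scan_block(bytes(corrupted), stored))  # [1]
```

Checking per chunk rather than per block means a single bad byte only implicates a 512-byte region, not the whole block.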

The process by which the system sorts the map outputs and transfers them to the reducers as inputs is known as the shuffle in MapReduce.
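The net effect of the shuffle can be sketched in a few lines of Python: every (key, value) pair from the mappers is routed to a reducer, values are grouped per key, and each reducer sees its keys in sorted order. This is a hypothetical, single-process illustration; real Hadoop sorts on the map side, spills to disk and merges on the reduce side, and the toy `by_first_letter` partitioner is an assumption made only for this example.

```python
from collections import defaultdict

def shuffle(map_outputs, num_reducers, partition):
    """Route (key, value) pairs to reducers, group by key, and sort each reducer's keys."""
    buckets = [defaultdict(list) for _ in range(num_reducers)]
    for key, value in map_outputs:
        buckets[partition(key, num_reducers)][key].append(value)
    # Each reducer receives (key, [values...]) pairs in sorted key order.
    return [sorted(bucket.items()) for bucket in buckets]

def by_first_letter(key, num_reducers):
    """Toy partitioner for this sketch only."""
    return ord(key[0]) % num_reducers

map_outputs = [("b", 1), ("a", 1), ("b", 1), ("c", 1), ("a", 1)]
result = shuffle(map_outputs, 2, by_first_letter)
print(result)  # [[('b', [1, 1])], [('a', [1, 1]), ('c', [1])]]
```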

The Distributed Cache is an important feature of the MapReduce framework. When you want to share files across all the nodes in a Hadoop cluster, the Distributed Cache is used for that.

A heartbeat is a signal sent periodically from a DataNode to the NameNode, and from a TaskTracker to the JobTracker. If the NameNode or the JobTracker stops receiving this signal, it assumes that there is some issue with the DataNode or the TaskTracker.
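The receiving side of the heartbeat mechanism boils down to remembering when each worker was last heard from and treating long silence as failure. Below is a minimal Python sketch of that idea; the class name is hypothetical, and the 630-second timeout is an assumption chosen to match the commonly documented HDFS behaviour of marking a DataNode dead after roughly 10.5 minutes (2 × a 5-minute recheck interval + 10 × a 3-second heartbeat interval). An injectable clock keeps the example deterministic.

```python
import time

class HeartbeatMonitor:
    """Tracks the last heartbeat per worker and reports workers that have gone silent."""

    def __init__(self, timeout_s: float, clock=time.monotonic):
        self.timeout_s = timeout_s
        self.clock = clock
        self.last_seen: dict[str, float] = {}

    def heartbeat(self, node_id: str) -> None:
        # Called whenever a worker's heartbeat arrives.
        self.last_seen[node_id] = self.clock()

    def dead_nodes(self) -> list[str]:
        now = self.clock()
        return sorted(n for n, t in self.last_seen.items() if now - t > self.timeout_s)

fake_now = [0.0]
mon = HeartbeatMonitor(timeout_s=630, clock=lambda: fake_now[0])
mon.heartbeat("dn1")
mon.heartbeat("dn2")
fake_now[0] = 600
mon.heartbeat("dn1")        # dn1 keeps reporting, dn2 goes silent
fake_now[0] = 700
print(mon.dead_nodes())     # ['dn2']
```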


The smart answer to this question would be that DataNodes are commodity hardware, like personal computers and laptops, because they store the data and are required in large numbers. But from your experience, you can add that the NameNode is the master node and it stores the metadata about all the blocks stored in HDFS. It needs a lot of memory (RAM), so the NameNode should be a high-end machine with ample memory.
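Why the NameNode needs so much RAM becomes clearer with a quick estimate: it keeps an in-memory object for every file, directory and block. The sketch below uses the commonly cited rule of thumb of roughly 150 bytes per namespace object; treat that constant and the function name as assumptions for illustration, not as exact sizing guidance.

```python
BYTES_PER_OBJECT = 150  # rule-of-thumb assumption, not an exact figure

def namenode_heap_estimate(num_files: int, num_dirs: int, num_blocks: int) -> int:
    """Rough heap needed for namespace metadata, in bytes."""
    return (num_files + num_dirs + num_blocks) * BYTES_PER_OBJECT

# 10 million single-block files plus 1 million directories
est = namenode_heap_estimate(10_000_000, 1_000_000, 10_000_000)
print(round(est / 1024**3, 2))  # 2.93 (GiB)
```

Metadata grows with the number of objects, not with the amount of data, which is also why many small files strain the NameNode.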

The NameNode periodically receives a heartbeat and a block report from each DataNode in the cluster. A heartbeat implies that the DataNode is functioning properly, and a block report contains a list of all the blocks on that DataNode. If a DataNode fails to send a heartbeat, it is marked dead after a specific period of time. The NameNode then replicates the blocks of the dead node to other DataNodes using the replicas created earlier.
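The re-replication step can be sketched as a planning function: for every block that lived on the dead node, copy it from a surviving replica to a live node that does not already hold it, until the replication factor is restored. This is a hypothetical simplification; the real NameNode uses rack-aware placement and load information, whereas this sketch just picks the first eligible nodes. Block IDs and node names are made up.

```python
def plan_rereplication(block_map, dead_node, live_nodes, replication=3):
    """Return (block_id, source, target) copies needed after dead_node is lost."""
    plan = []
    for block_id, holders in sorted(block_map.items()):
        if dead_node not in holders:
            continue
        survivors = [n for n in holders if n != dead_node]
        if not survivors:
            continue  # no replica left to copy from: the block is lost
        candidates = [n for n in live_nodes if n not in holders]
        missing = replication - len(survivors)
        for target in candidates[:missing]:
            plan.append((block_id, survivors[0], target))
    return plan

block_map = {"blk_1": ["dn1", "dn2", "dn3"], "blk_2": ["dn2", "dn3", "dn4"]}
plan = plan_rereplication(block_map, "dn1", ["dn2", "dn3", "dn4", "dn5"])
print(plan)  # [('blk_1', 'dn2', 'dn4')]
```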

HDFS supports exclusive writes only. When the first client contacts the “NameNode” to open a file for writing, the “NameNode” grants the client a lease to create this file. When a second client tries to open the same file for writing, the “NameNode” sees that the lease for the file has already been granted to another client and rejects the open request from the second client.
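The single-writer rule can be illustrated with a tiny lease table keyed by file path. This is a minimal sketch with a hypothetical `LeaseManager` class; real HDFS leases also expire (soft and hard limits) and are renewed by the client, which this example deliberately leaves out.

```python
class LeaseManager:
    """Grants at most one write lease per file path (single-writer semantics)."""

    def __init__(self):
        self.leases: dict[str, str] = {}  # path -> client holding the write lease

    def open_for_write(self, path: str, client: str) -> bool:
        holder = self.leases.get(path)
        if holder is not None and holder != client:
            return False  # another client already holds the lease: reject
        self.leases[path] = client
        return True

    def close(self, path: str, client: str) -> None:
        # Only the lease holder can release the lease.
        if self.leases.get(path) == client:
            del self.leases[path]

lm = LeaseManager()
print(lm.open_for_write("/data/log.txt", "client-A"))  # True
print(lm.open_for_write("/data/log.txt", "client-B"))  # False
lm.close("/data/log.txt", "client-A")
print(lm.open_for_write("/data/log.txt", "client-B"))  # True
```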

In HDFS, data blocks are distributed across all the machines in a cluster, whereas in NAS, data is stored on dedicated hardware.

The Checkpoint Node keeps track of the latest checkpoint in a directory that has the same structure as the NameNode’s directory. It creates checkpoints of the namespace at regular intervals by downloading the edits and fsimage files from the NameNode and merging them locally. The new image is then uploaded back to the active NameNode.
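Conceptually, a checkpoint is “old snapshot + replayed edit log = new snapshot”. The Python sketch below models the namespace as a dictionary from path to metadata and the edit log as a list of operations; this data model, the three operation names and the `apply_edits` function are hypothetical simplifications of the real fsimage and edits formats.

```python
def apply_edits(fsimage: dict, edits: list) -> dict:
    """Replay an edit log on top of a namespace snapshot and return the new snapshot."""
    image = dict(fsimage)  # merge on a copy; the old image stays valid until replaced
    for op, path, *args in edits:
        if op == "create":
            image[path] = args[0]
        elif op == "delete":
            image.pop(path, None)
        elif op == "rename":
            image[args[0]] = image.pop(path)
        else:
            raise ValueError(f"unknown edit op: {op}")
    return image

fsimage = {"/a": {"replication": 3}}
edits = [("create", "/b", {"replication": 2}), ("rename", "/a", "/c"), ("delete", "/b")]
new_image = apply_edits(fsimage, edits)
print(new_image)  # {'/c': {'replication': 3}}
```

Merging on a copy mirrors why a separate node is useful: the NameNode keeps serving from its current state while the merged image is produced elsewhere.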


BackupNode: The Backup Node also provides checkpointing functionality like the Checkpoint Node, but it additionally maintains an up-to-date in-memory copy of the file system namespace that is in sync with the active NameNode.

The best configuration for running Hadoop jobs is dual-core machines or dual processors with 4 GB or 8 GB of RAM that use ECC memory. Hadoop benefits greatly from ECC memory, even though it is not a low-end option. ECC memory is recommended for running Hadoop because most Hadoop users have experienced various checksum errors when using non-ECC memory. However, the hardware configuration also depends on the workflow requirements and can change accordingly.

The main function of the MapReduce partitioner is to ensure that all the values of a single key go to the same reducer, which also helps distribute the map output evenly across the reducers.
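Hadoop’s default `HashPartitioner` assigns a key to reducer `(key.hashCode() & Integer.MAX_VALUE) % numReduceTasks`. The Python sketch below reproduces that formula using Java’s `String.hashCode()` algorithm so the result is deterministic. Treat it as an illustration: Hadoop key types such as `Text` define their own `hashCode()`, so actual reducer numbers in a job may differ, and this helper only handles characters in the Basic Multilingual Plane.

```python
def java_string_hash(s: str) -> int:
    """Java's String.hashCode() as an unsigned 32-bit value (BMP characters only)."""
    h = 0
    for ch in s:
        h = (31 * h + ord(ch)) & 0xFFFFFFFF  # wrap like Java int arithmetic
    return h

def hash_partition(key: str, num_reducers: int) -> int:
    # Clear the sign bit, then take the remainder, mirroring
    # (key.hashCode() & Integer.MAX_VALUE) % numReduceTasks.
    return (java_string_hash(key) & 0x7FFFFFFF) % num_reducers

print(java_string_hash("hello"))   # 99162322
print(hash_partition("hello", 4))  # 2
```

Because the reducer depends only on the key, every occurrence of “hello” from every mapper lands on the same reducer; an even spread across reducers holds only if the keys themselves are not heavily skewed.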

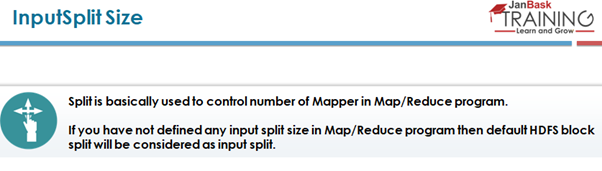

The logical division of data in the Hadoop framework is known as a split, whereas the physical division of data is known as an HDFS block.
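The difference between splits and blocks shows up clearly in how splits are computed. The sketch below is modeled on `FileInputFormat`’s logic as we understand it: split size = max(minSize, min(maxSize, blockSize)), and a trailing piece up to 10% larger than the split size is kept as one split. The function name and default arguments are assumptions for illustration.

```python
MB = 1024 * 1024
SPLIT_SLOP = 1.1  # a final piece up to 10% larger than split_size is not split again

def compute_splits(file_size, block_size, min_size=1, max_size=2**63 - 1):
    """Return (offset, length) input splits for one file."""
    split_size = max(min_size, min(max_size, block_size))
    splits, offset, remaining = [], 0, file_size
    while remaining / split_size > SPLIT_SLOP:
        splits.append((offset, split_size))
        offset += split_size
        remaining -= split_size
    if remaining > 0:
        splits.append((offset, remaining))
    return splits

# A 140 MB file occupies two 128 MB blocks (128 + 12) but yields one logical split.
print([length // MB for _, length in compute_splits(140 * MB, 128 * MB)])  # [140]
print([length // MB for _, length in compute_splits(300 * MB, 128 * MB)])  # [128, 128, 44]
```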

In TextInputFormat, each line in the text file is a record. The value is the content of the line being processed, and the key is the byte offset of that line within the file.
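Here is a small Python sketch of the (key, value) pairs a mapper would see with TextInputFormat: the key is the byte offset where each line starts and the value is the line without its terminator. It is an illustration only (in Hadoop the key is a `LongWritable` and the value a `Text`); the generator name is hypothetical. The second example shows that offsets count bytes, not characters.

```python
def text_records(data: bytes):
    """Yield (byte_offset, line) pairs the way TextInputFormat presents them to a mapper."""
    offset = 0
    for raw in data.splitlines(keepends=True):
        line = raw.rstrip(b"\r\n")
        yield offset, line.decode("utf-8")
        offset += len(raw)  # advance by the raw length, terminator included

print(list(text_records(b"hello\nworld\nhadoop\n")))
# [(0, 'hello'), (6, 'world'), (12, 'hadoop')]
print(list(text_records("héllo\nworld\n".encode("utf-8"))))
# [(0, 'héllo'), (7, 'world')]  "é" takes two bytes in UTF-8
```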



The JanBask Training Team includes certified professionals and expert writers dedicated to helping learners navigate their career journeys in QA, Cybersecurity, Salesforce, and more. Each article is carefully researched and reviewed to ensure quality and relevance.
