You might be reading this post because you are interested in learning Apache Oozie or are planning to enroll in an Oozie certification course to grow your career. If so, you have come to the right place. This post covers Oozie basics such as what Oozie is, how it works, its features and benefits, and how to install it.

Apache Oozie is a scheduler system used in Hadoop for job scheduling. You might be wondering what job scheduling is and why it matters. Job scheduling decides when, and in what order, the jobs that make up a task in the Hadoop Ecosystem are run.

What is Hadoop Ecosystem?

The Hadoop Ecosystem is a platform built around the Hadoop framework for solving big data problems. It comprises many services for storing, ingesting, analysing, and maintaining data, and these services work together to perform a task. Hadoop components such as HDFS, YARN, MapReduce, Hive, Pig, Sqoop, and Oozie each play a major role in the ecosystem.

It is now clear that Apache Oozie is part of the Hadoop Ecosystem and is used for job scheduling. Job scheduling is needed when two or more jobs depend on each other. For example, the output of a MapReduce job may have to be passed to Hive for further processing. It is also needed when a job has to run at a certain time, such as a set of jobs that must run weekly or monthly, or as soon as its input data becomes available.
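
To make the two kinds of triggers concrete, here is a minimal Python sketch of a check that decides whether a recurring job is due. It is not Oozie code, and the helper name `is_due` and the idea of modelling input data as a set of available paths are illustrative assumptions. A job runs only once its scheduled time has arrived and all of its required input data exists. In Oozie itself, coordinator jobs express this combination.

```python
from datetime import datetime

def is_due(now, scheduled_time, required_inputs=(), available_inputs=frozenset()):
    """Hypothetical helper: True when a job may start.

    - time trigger: the scheduled time has been reached
    - data trigger: every required input path is already available
    """
    time_reached = now >= scheduled_time
    data_ready = all(path in available_inputs for path in required_inputs)
    return time_reached and data_ready

# A weekly job scheduled for Monday 15 Jan 2024 00:00 that needs last week's logs.
scheduled = datetime(2024, 1, 15, 0, 0)
needed = ["/data/logs/2024-W02"]  # hypothetical input path

print(is_due(datetime(2024, 1, 14, 23, 0), scheduled, needed, {"/data/logs/2024-W02"}))  # False: too early
print(is_due(datetime(2024, 1, 15, 1, 0), scheduled, needed, set()))                    # False: data missing
print(is_due(datetime(2024, 1, 15, 1, 0), scheduled, needed, {"/data/logs/2024-W02"}))  # True
```

Keeping both conditions in one check mirrors the scenario above: a weekly report should neither start early nor start on incomplete data.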

Apache Oozie can easily handle such scenarios and schedule jobs as required. This blog covers the basics of Apache Oozie, its job types, and how jobs are executed with it.

Apache Oozie is used to manage and execute Hadoop jobs in a distributed environment. Users and developers can combine various types of tasks into a single pipeline. These tasks can belong to any Hadoop component, such as Pig, Sqoop, MapReduce, or Hive. Oozie can also execute two or more jobs in parallel.

Oozie is a reliable, scalable, and extensible scheduling system. It is a Java web application that triggers workflow actions and relies on the Hadoop execution engine to run the tasks.

Oozie detects task completion through callbacks and polling. When a task is initialized, Oozie gives it a unique callback HTTP URL, and the task notifies that URL when it completes. If the task cannot invoke the callback URL, Oozie polls the task for completion.
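
The callback-plus-polling idea can be sketched as a toy Python class. This is an illustration, not Oozie's implementation. The class name `CompletionTracker`, the `base_url` value and the token query parameter are hypothetical. Each task receives a unique callback URL. A task that calls back is marked complete directly, and otherwise a poll falls back to asking whether the task has finished.

```python
import uuid

class CompletionTracker:
    """Toy model of callback-plus-polling completion tracking (not Oozie code)."""

    def __init__(self):
        self.callback_urls = {}  # task id -> unique callback URL handed to the task
        self.completed = set()   # task ids known to be finished

    def start_task(self, task_id, base_url="http://localhost:11000/oozie/callback"):
        # Hypothetical URL format: each task gets its own unique callback URL.
        url = f"{base_url}?id={task_id}&token={uuid.uuid4().hex}"
        self.callback_urls[task_id] = url
        return url

    def on_callback(self, task_id):
        # The task reached its callback URL, so completion is known immediately.
        self.completed.add(task_id)

    def poll(self, task_id, is_finished):
        # Fallback when no callback arrived: ask the task directly.
        if task_id not in self.completed and is_finished(task_id):
            self.completed.add(task_id)
        return task_id in self.completed

tracker = CompletionTracker()
tracker.start_task("task-a")
tracker.start_task("task-b")
tracker.on_callback("task-a")                                 # task-a reported via its callback
finished = {"task-b"}                                         # task-b finished, but its callback was lost
print(tracker.poll("task-a", lambda t: t in finished))        # True (already known from callback)
print(tracker.poll("task-b", lambda t: t in finished))        # True (discovered by polling)
```

The design point is that the callback path is cheap and immediate, while polling only matters for the tasks whose callback never arrived.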

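Oozie executes the following three job types: workflow jobs, which are DAGs of actions; coordinator jobs, which trigger workflow jobs on a time schedule or when input data becomes available; and bundle jobs, which group several coordinator jobs so that they can be managed together.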

Hadoop, an open-source framework, uses Oozie for job scheduling. Organizations use Hadoop to handle big data tasks, and big data analysis typically creates multiple jobs along the way. These jobs have to be processed efficiently, and this is where Oozie comes into action.

Like Hadoop, Oozie is an open-source project. It simplifies workflows and coordinates several jobs. Oozie lets Hadoop developers define different jobs or actions along with the dependencies between them, and it respects those dependencies while controlling and scheduling the jobs.

Oozie workflows are defined as a DAG (directed acyclic graph), which programs use to define their actions. A DAG is a graph without cycles. Every path has a start point and an end point, and no path loops back to a node it has already visited. An Oozie DAG consists of action nodes and the dependencies between them. Different paths may start and end at different points, but no path ever returns to its starting point.
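
The "no cycles" rule is what makes a valid run order possible. As a sketch, Python's standard `graphlib` module can check a set of job dependencies and produce one valid order. The job names below are hypothetical, and this is a generic topological sort rather than Oozie's own scheduler. If the dependencies contain a cycle, no order exists, and the sketch reports the cycle instead.

```python
from graphlib import TopologicalSorter, CycleError

# Each key lists the jobs it depends on (hypothetical job names).
workflow = {
    "sqoop_import": set(),
    "mapreduce_clean": {"sqoop_import"},
    "hive_report": {"mapreduce_clean"},
    "pig_summary": {"mapreduce_clean"},
}

def execution_order(deps):
    """Return one valid run order, or raise ValueError if the graph has a cycle."""
    try:
        return list(TopologicalSorter(deps).static_order())
    except CycleError as exc:
        # graphlib stores the detected cycle as the second element of exc.args.
        raise ValueError(f"not a DAG, cycle found: {exc.args[1]}") from exc

print(execution_order(workflow))
```

Here `sqoop_import` always comes first and `mapreduce_clean` second. `hive_report` and `pig_summary` have no dependency on each other, so either order (or running them in parallel) is valid.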

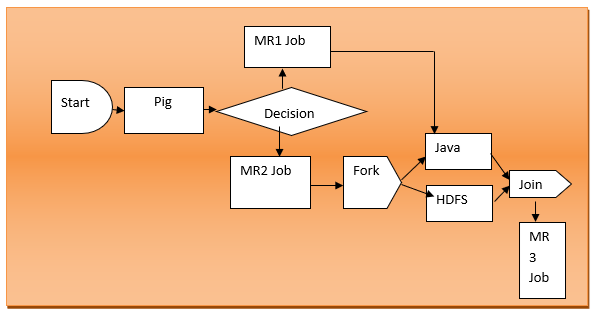

Oozie can handle various types of tasks and represent them as action nodes. These jobs may include MapReduce jobs, Java applications, Pig applications, or file system jobs. Flow control in the DAG is represented by control flow nodes, which decide where execution goes next based on the output of the preceding job. Oozie's control flow nodes include fork, join, and decision nodes. The figure above shows an example of an Oozie workflow application.
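
The fork, join, and decision nodes can be imitated in a few lines of Python. This sketch only simulates the control flow and is not how Oozie runs jobs. The action names, the `threshold` value and the `hive_load` transition are hypothetical. The fork runs several actions in parallel, the join waits for all of them, and the decision picks the next transition from their output.

```python
from concurrent.futures import ThreadPoolExecutor

def fork_join(actions, inputs):
    """Fork: run every action in parallel. Join: wait until all have finished."""
    with ThreadPoolExecutor(max_workers=len(actions)) as pool:
        futures = {name: pool.submit(fn, inputs) for name, fn in actions.items()}
        return {name: f.result() for name, f in futures.items()}  # join point

def decide(results, threshold=100):
    """Decision node: choose the next transition from the preceding jobs' output."""
    return "hive_load" if results["count_rows"] > threshold else "end"

# Hypothetical actions standing in for, e.g., a Pig job and a MapReduce job.
actions = {
    "count_rows": lambda data: len(data),
    "count_errors": lambda data: sum(1 for line in data if "ERROR" in line),
}
logs = ["INFO ok"] * 150 + ["ERROR disk full"] * 3
results = fork_join(actions, logs)
print(results)          # {'count_rows': 153, 'count_errors': 3}
print(decide(results))  # hive_load
```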

An Oozie workflow is a collection of actions such as Hive, Pig, and MapReduce jobs. These jobs are arranged in a DAG that defines the order in which the actions are executed. The DAG is defined in hPDL (Hadoop Process Definition Language), a compact XML-based process definition language that uses a small number of control flow nodes and action nodes. Control flow nodes mark the start, end, and failure (kill) points of the workflow, while fork, decision, and join nodes determine the execution path.
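
For a feel of what hPDL looks like, the Python sketch below uses the standard `xml.etree.ElementTree` module to build a minimal workflow. It has a start node, one file system (`fs`) action that creates a directory, `ok`/`error` transitions, a kill node, and an end node. The workflow name, node names and target path are hypothetical. The schema version `uri:oozie:workflow:0.5` is an assumption and should match your Oozie release.

```python
import xml.etree.ElementTree as ET

NS = "uri:oozie:workflow:0.5"  # assumed schema version; match your Oozie release

def build_workflow(name, target_dir):
    """Build a minimal hPDL workflow: start -> fs action -> end, plus a kill node."""
    app = ET.Element("workflow-app", {"name": name, "xmlns": NS})
    ET.SubElement(app, "start", {"to": "make-dir"})

    action = ET.SubElement(app, "action", {"name": "make-dir"})
    fs = ET.SubElement(action, "fs")
    ET.SubElement(fs, "mkdir", {"path": target_dir})
    ET.SubElement(action, "ok", {"to": "end"})      # transition on success
    ET.SubElement(action, "error", {"to": "fail"})  # transition on failure

    kill = ET.SubElement(app, "kill", {"name": "fail"})
    ET.SubElement(kill, "message").text = "fs action failed"
    ET.SubElement(app, "end", {"name": "end"})
    return ET.tostring(app, encoding="unicode")

print(build_workflow("demo-wf", "${nameNode}/user/demo/output"))
```

In practice, workflow XML is usually written by hand and stored in HDFS. Generating it here simply shows the structure of control nodes and action nodes in one place.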

In the DAG, action nodes act as triggers that start the execution of a task when the required conditions are met. Oozie can control several types of actions, including Hadoop MapReduce actions, Pig actions, Java actions, sub-workflow actions, and Hadoop file system actions. Next, we will walk through a step-by-step Oozie installation guide for beginners.

Oozie can be installed on machines that already have the Hadoop framework, using a Debian package, an RPM, or a tarball. Some Hadoop distributions, such as Cloudera CDH3, already include Oozie. On those, Oozie can be installed on an edge node by pulling down the Oozie package with yum. The Oozie client and server can be set up either on the same machine or on two different machines, depending on the space available.

The Oozie server component launches and controls the various processes or jobs. Oozie's client-server architecture lets user programs launch jobs through the Oozie client, which communicates with the server.

The OOZIE_URL shell variable must also be set so that the Oozie client can reach the server: `export OOZIE_URL=http://localhost:11000/oozie`
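
A client program has to decide which server URL to use. The Python sketch below follows the same convention as the `oozie` command-line client, as we understand it: an explicit `-oozie` option wins, and otherwise the OOZIE_URL environment variable is used. The helper `resolve_oozie_url` is hypothetical and not part of any Oozie library.

```python
import os

def resolve_oozie_url(cli_value=None, env=os.environ):
    """Hypothetical helper: an explicit URL (like the CLI's -oozie option) wins,
    otherwise fall back to the OOZIE_URL environment variable."""
    url = cli_value or env.get("OOZIE_URL")
    if not url:
        raise RuntimeError("Set OOZIE_URL, e.g. export OOZIE_URL=http://localhost:11000/oozie")
    return url.rstrip("/")  # normalise so paths can be appended safely

print(resolve_oozie_url(env={"OOZIE_URL": "http://localhost:11000/oozie/"}))
# http://localhost:11000/oozie
```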

On the Hadoop platform, Oozie runs as a service in the cluster, and clients submit workflow definitions that can run immediately or later. Oozie workflows consist of two types of nodes: control flow nodes and action nodes.

Action nodes represent workflow tasks, such as moving a file to HDFS or running a MapReduce, Pig, or Hive job. Control flow nodes control workflow execution by applying logic based on the results of earlier nodes. In addition, start, end, and error (kill) nodes designate where the workflow begins, where it completes successfully, and where it stops on failure.
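
The interplay of start, action, end, and kill nodes can be sketched as a small interpreter in Python. This is a simplified model, not Oozie's engine. The dictionary format, the node names and `run_workflow` are hypothetical; only the SUCCEEDED/KILLED status names are borrowed from Oozie's job states. Each action node has an `ok` and an `error` transition, and the walk stops at an end node or a kill node.

```python
def run_workflow(nodes, actions, start="start"):
    """Follow start -> action -> ok/error transitions until an end or kill node."""
    path = []
    current = nodes[start]["to"]
    while True:
        path.append(current)
        node = nodes[current]
        if node["type"] == "end":
            return "SUCCEEDED", path
        if node["type"] == "kill":
            return "KILLED", path
        succeeded = actions[current]()  # run the action node's task
        current = node["ok"] if succeeded else node["error"]

# Hypothetical workflow: copy a file to HDFS, then run a Hive job.
nodes = {
    "start": {"type": "start", "to": "copy_to_hdfs"},
    "copy_to_hdfs": {"type": "action", "ok": "hive_job", "error": "fail"},
    "hive_job": {"type": "action", "ok": "end", "error": "fail"},
    "fail": {"type": "kill"},
    "end": {"type": "end"},
}
print(run_workflow(nodes, {"copy_to_hdfs": lambda: True, "hive_job": lambda: True}))
# ('SUCCEEDED', ['copy_to_hdfs', 'hive_job', 'end'])
print(run_workflow(nodes, {"copy_to_hdfs": lambda: True, "hive_job": lambda: False}))
# ('KILLED', ['copy_to_hdfs', 'hive_job', 'fail'])
```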

Oozie is mainly used to manage various types of jobs and tasks. The user specifies job dependencies in the form of a DAG, and Oozie executes the jobs according to the dependencies specified in the workflow.

Users can also specify how often a task runs, so the frequency of repetitive tasks can be set and changed as needed. This saves developers the time they would otherwise spend managing workflows or sets of jobs by hand, and it makes job scheduling much easier. For these reasons, Oozie is worth considering for every Hadoop developer.
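
As a rough illustration of what a frequency setting means, the Python sketch below lists the run times a fixed frequency produces between a start and an end time. It mirrors the idea behind Oozie coordinator frequencies (expressed in minutes), but the function `nominal_times` and the half-open `[start, end)` interval are assumptions of this sketch.

```python
from datetime import datetime, timedelta

def nominal_times(start, end, frequency_minutes):
    """List the run times a fixed-frequency schedule produces in [start, end)."""
    if frequency_minutes <= 0:
        raise ValueError("frequency must be positive")
    step = timedelta(minutes=frequency_minutes)
    times, t = [], start
    while t < end:
        times.append(t)
        t += step
    return times

# A daily job (1440 minutes) for the first week of March 2024.
runs = nominal_times(datetime(2024, 3, 1), datetime(2024, 3, 8), 1440)
print(len(runs))          # 7
print(runs[0], runs[-1])  # 2024-03-01 00:00:00 2024-03-07 00:00:00
```

Changing the frequency argument, for example to 60 for hourly runs, is all it takes to reschedule the job. That is the convenience the paragraph above describes.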

Oozie is a Java application that runs on the Hadoop platform and provides the features described above to Hadoop developers.

We have tried to cover all of the basic aspects of Oozie. This Java tool has made job scheduling much easier and more automatic. Developers no longer need to worry about scheduling jobs by hand, and they can use the tool directly from within the Hadoop framework without much additional knowledge.

Oozie covers a wide range of job scheduling tasks, so jobs of the same type or of different types can all be scheduled with it. Hadoop has become an important platform for Big Data professionals, and Oozie makes working with it considerably more convenient.

If you want to explore the tool further, consider joining the Apache Hadoop certification program at JanBask Training.

The JanBask Training Team includes certified professionals and expert writers dedicated to helping learners navigate their career journeys in QA, Cybersecurity, Salesforce, and more. Each article is carefully researched and reviewed to ensure quality and relevance.
