
Data transformation is the process of changing data from its original format into another. It is a vital part of data warehousing, data integration, and other data management operations. How complex a transformation is in data science depends on how much the data must change between its original and final forms.

To integrate, analyze, store, and otherwise make use of data, businesses often rely on data transformation to convert data from disparate sources into a common format. Data transformation can be automated with an ETL tool or performed manually in a programming language such as Python. Transformation is not only about technology, though: teams and procedures also need to be aligned for it to work well.

Data transformation is one of the most important steps in preparing data for data science, because it makes patterns in the data easier to see. Cleaning and making sense of messy data requires transformation, which modifies the data's structure, format, or values.

There are several scenarios in which transforming data might be useful. What follows is a list of many of the more common ones:

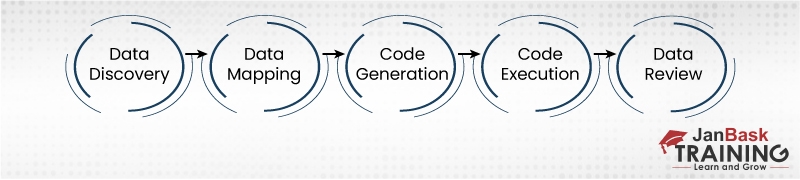

Data transformation is an integral part of ETL (Extract, Transform, Load). Data collected from the source is raw and needs adjusting before it is sent to the target. The conversion typically follows these steps:

1. Data Discovery: This is the first step of the transformation. It involves identifying and understanding the data in its native format. Data profiling tools or a custom-written script give you insight into the data's structure so you can decide what changes need to be made.

2. Data Mapping: This step describes how each field is mapped, joined, filtered, updated, and so on to get the data into the required format.

3. Code Generation: This step writes or generates code (in a language like SQL, R, Python, etc.) that transforms your data according to the mapping rules you specified.

4. Code Execution: The generated code is run against the data to produce the expected result. Either the code is integrated into the data transformation tool, or it has to be executed manually in a series of stages.

5. Data Review: The final phase of transformation checks that the output data meets your transformation criteria. Developers and analysts are informed of any discrepancies or errors in the data.
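The five steps above can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the records, the field names (`name`, `signup`, `spend`), and the helper functions `profile`, `transform`, and `review` are all hypothetical, and a real pipeline would usually rely on an ETL tool or a data library instead.

```python
from collections import Counter

# Hypothetical raw records, as they might arrive from a source system.
raw_rows = [
    {"name": " Ada Lovelace ", "signup": "2023-01-05", "spend": "120.50"},
    {"name": "alan turing", "signup": "2023-02-11", "spend": "80"},
]

# 1. Data discovery: profile which Python types appear in each field.
def profile(rows):
    types = {}
    for row in rows:
        for field, value in row.items():
            types.setdefault(field, Counter())[type(value).__name__] += 1
    return types

# 2. Data mapping: describe how each source field becomes a target field.
mapping = {
    "name": ("customer_name", lambda v: v.strip().title()),
    "signup": ("signup_year", lambda v: int(v[:4])),
    "spend": ("spend_usd", float),
}

# 3-4. Code generation and execution: apply the mapping rules to every row.
def transform(rows, rules):
    return [
        {target: convert(row[source]) for source, (target, convert) in rules.items()}
        for row in rows
    ]

# 5. Data review: return the positions of rows that break the output criteria.
def review(rows):
    return [
        i for i, row in enumerate(rows)
        if not isinstance(row["spend_usd"], float) or row["spend_usd"] < 0
    ]

field_types = profile(raw_rows)
clean_rows = transform(raw_rows, mapping)
problems = review(clean_rows)
```

Keeping the mapping as data, separate from the code that applies it, makes the rules easy to inspect and change without touching the execution step.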

Transforming data is not always as simple as it seems, which is why it is important to have transformation tools that are high quality, keep data safe, and are free of compatibility problems. The specifics of your data transformation process depend on a number of factors, including the use case, but there are typically four stages:

1. Data Interpretation: The initial stage in data transformation is identifying and understanding the data in its original format. This is crucial for figuring out what data is available and what it can be turned into. Some data interpretation tasks are more complex than they first appear, so data profiling tools are used to get a more in-depth look at how the data is stored.

2. Pre-Translation Data Quality Check: This phase helps find issues in the source database, such as missing or damaged data, that might cause problems later in the data transformation process.

3. Data Translation: This phase is crucial to the whole transformation process. The term "data translation" is used to describe the process of swapping out certain pieces of data for new ones that are more suitable for your desired format.

4. Post-Translation Data Quality Check: This phase is very similar to the pre-translation data quality check. Even if your data was error-free beforehand, there is no guarantee that it will be error-free after translation, because the translation step itself can introduce errors and inconsistencies. A post-translation quality check finds and corrects errors, omissions, and inconsistencies in the translated data.
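The sketch below shows how quality checks before and after translation might fit together. The rows, the `COUNTRY_NAMES` lookup table, and the functions `pre_check`, `translate`, and `post_check` are assumptions made up for this example. Row 4 is valid in the source but uses a country code the lookup does not know, so the gap only appears after translation.

```python
# Hypothetical source rows; None marks a missing value.
source = [
    {"id": 1, "country": "DE", "amount": "10.5"},
    {"id": 2, "country": None, "amount": "7"},
    {"id": 3, "country": "FR", "amount": "n/a"},
    {"id": 4, "country": "US", "amount": "3"},
]

COUNTRY_NAMES = {"DE": "Germany", "FR": "France"}  # assumed lookup table

def pre_check(rows):
    """Pre-translation check: ids of rows with missing or damaged fields."""
    bad = []
    for row in rows:
        try:
            float(row["amount"])
        except (TypeError, ValueError):
            bad.append(row["id"])
            continue
        if row["country"] is None:
            bad.append(row["id"])
    return bad

def translate(row):
    """Translation: swap source values for ones that suit the target format."""
    return {
        "id": row["id"],
        "country": COUNTRY_NAMES.get(row["country"]),  # None if code is unknown
        "amount": float(row["amount"]),
    }

def post_check(rows):
    """Post-translation check: ids of rows where translation left a gap."""
    return [row["id"] for row in rows if row["country"] is None]

rejected = pre_check(source)
translated = [translate(row) for row in source if row["id"] not in rejected]
introduced = post_check(translated)
```

Here `rejected` holds the rows caught before translation and `introduced` holds the rows that only became faulty during it, which is why both checks are useful.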


Aggregation: The term "data aggregation" refers to the procedure of collecting raw data and presenting it in a summary form for the purposes of statistical analysis. Aggregating raw data, for instance, can result in statistics such as average, minimum, maximum, total, and count. After data has been gathered and compiled into a report, it may be analyzed to reveal trends and patterns in relation to individual resources or categories of resources. There are two types of data aggregation: temporal and geographical.

Attribute Construction: New attributes are built from the existing set of attributes and added to the dataset to make mining easier, a process also known as attribute creation or feature construction. This helps make a data science workflow more effective and efficient.

Discretization: Data discretization assigns discrete values to intervals of a continuous attribute, replacing each continuous value with the label of the interval it falls into. There is a wide variety of discretization techniques, from the straightforward equal-width and equal-frequency approaches to the more involved MDLP (minimum description length principle) method.

Generalization: To get a more complete picture of a problem or scenario, data generalization creates progressively more abstract summaries in an assessment database. More generalized data is well suited to online analytical processing (OLAP), which is designed to answer analytical queries with many dimensions quickly. The method can also be used for online transaction processing (OLTP). An OLTP system is a type of database system used to manage and support transaction-oriented applications, especially those involving data entry and retrieval.

Integration: Integrating data from several sources into a single, usable format is an important part of the data pre-processing phase. A number of sources, such as databases, data cubes, or flat files, are brought together. There are two primary methods for integrating data: tight coupling and loose coupling.

Manipulation: Data manipulation refers to the process of altering or manipulating information for the purpose of better understanding and organization. Financial data, consumer behavior, and other useful insights may be gained with the use of data analysis tools, which aid in seeing patterns in the data and putting it into a format suitable for further study.

Normalization: In order to make data more suitable for processing, data normalization is performed. Data normalization's primary objective is the removal of redundant information. Its various uses include boosting the performance of data science algorithms and accelerating the extraction of relevant information.

Smoothing: Data smoothing is a technique for identifying patterns in noisy data whose form is uncertain. The method may be used to detect movements in the stock market, the economy, consumer attitudes, and so on.
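Three of the techniques above (aggregation, equal-width discretization, and smoothing) can be illustrated with the Python standard library. The `readings` values and the helper names `equal_width_bins` and `moving_average` are made up for this sketch, and a moving average is only one of several possible smoothing methods.

```python
from statistics import mean

readings = [12.0, 15.0, 11.0, 30.0, 14.0, 13.0]  # hypothetical daily values

# Aggregation: summarize raw values as average, minimum, maximum, total, count.
summary = {
    "average": mean(readings),
    "minimum": min(readings),
    "maximum": max(readings),
    "total": sum(readings),
    "count": len(readings),
}

# Discretization (equal-width): assign each value to one of `bins` intervals.
# Assumes the values are not all identical, otherwise the width would be zero.
def equal_width_bins(values, bins):
    low, high = min(values), max(values)
    width = (high - low) / bins
    # The maximum value is clamped into the last bin.
    return [min(int((v - low) / width), bins - 1) for v in values]

# Smoothing: a simple moving average over a sliding window.
def moving_average(values, window):
    return [
        mean(values[i:i + window])
        for i in range(len(values) - window + 1)
    ]

labels = equal_width_bins(readings, bins=3)
smoothed = moving_average(readings, window=3)
```

With these inputs the outlier 30.0 lands in the highest bin while every other reading falls in the lowest one, and the smoothed series is shorter than the input by `window - 1` values.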


In most situations, raw data is not structured or arranged in a way that allows for the aforementioned applications. Some common examples of how data can be transformed to make it more instantly usable are shown below.

Revising: Values in the data are checked and reorganized to make sure they make sense for their intended purpose. Database normalization includes the process of removing duplicate and one-to-many values from a data model. Reduced storage needs and improved readability for analysts are two additional benefits of normalization. However, it's a lengthy process that requires lots of reading, analyzing, and thinking outside the box.

During data cleansing, it is common practice to convert data values to ensure they are usable in a variety of formats.

Computing: New data values are often computed from existing data in order to calculate rates, proportions, summary statistics, and other key numbers. Another use is turning unstructured data, such as media files, into structured data that a machine learning system can use.

Scaling, standardizing, and normalizing place values on a consistent scale, such as fractions of a standard deviation, which is what Z-score normalization does. This makes it easy to compare different numerical values.

The term "vectorization" refers to transforming non-numerical data, such as text, into numerical arrays. In machine learning, this is useful for many purposes, such as image recognition and natural language processing (NLP).
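As a minimal sketch of the two computing transformations just described, the code below applies Z-score normalization to a list of numbers and vectorizes short texts as word counts. The function names and sample values are hypothetical. The bag-of-words approach is one simple vectorization technique chosen for illustration, and the population standard deviation (`pstdev`) is an assumption, since the sample standard deviation is also commonly used.

```python
from statistics import mean, pstdev

def z_scores(values):
    """Express each value as a number of standard deviations from the mean."""
    mu, sigma = mean(values), pstdev(values)
    return [(v - mu) / sigma for v in values]

def bag_of_words(documents):
    """Vectorize texts as word-count arrays over a shared, sorted vocabulary."""
    tokenized = [doc.lower().split() for doc in documents]
    vocabulary = sorted({word for tokens in tokenized for word in tokens})
    vectors = [[tokens.count(word) for word in vocabulary] for tokens in tokenized]
    return vocabulary, vectors

heights_cm = [150.0, 160.0, 170.0]  # hypothetical values
scaled = z_scores(heights_cm)

vocab, vectors = bag_of_words(["red fish blue fish", "blue sky"])
```

After scaling, the values have a mean of zero and a standard deviation of one, so they can be compared with other attributes that were scaled the same way.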

Separating: Dividing values into their constituent components allows us to distinguish between them. Data values are sometimes intermingled inside the same field due to quirks in data collecting, however this might make it difficult to do fine-grained analysis if the values aren't extracted.

It is standard practice to split a single column into several columns, either to handle fields with delimited values or, in regression analysis, to create dummy variables from columns with multiple possible category values.

Filtering excludes data on the basis of particular row values or column contents.
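The separating operations above might look like the following in plain Python. The rows and column names (`full_name`, `plan`, `tags`) are invented for the example, and the semicolon delimiter is an assumption about how the source field was packed.

```python
rows = [
    {"full_name": "Ada Lovelace", "plan": "pro", "tags": "math;poetry"},
    {"full_name": "Alan Turing", "plan": "free", "tags": "logic"},
    {"full_name": "Grace Hopper", "plan": "team", "tags": ""},
]

# Splitting: break delimited fields into their constituent parts.
for row in rows:
    row["first_name"], row["last_name"] = row["full_name"].split(" ", 1)
    row["tag_list"] = [tag for tag in row["tags"].split(";") if tag]

# Dummy variables: one 0/1 column per category value of "plan".
categories = sorted({row["plan"] for row in rows})
for row in rows:
    for category in categories:
        row[f"plan_{category}"] = int(row["plan"] == category)

# Filtering: keep only the rows whose values match a condition.
paying = [row for row in rows if row["plan"] != "free"]
```

When dummy variables feed a regression model, one of the category columns is usually dropped so that the remaining columns are not perfectly collinear.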

Combining: A common and crucial task in analytics is bringing together data from different tables and sources to paint a comprehensive picture of an organization's operations.

Joining allows you to combine information from multiple tables into one.

Merging, also known as appending or union, combines records from several tables into one. By combining two tables on a shared column such as "email", you can piece together elements of the sales and marketing funnel. This is also an example of integration, which reconciles names and values for the same data element across several tables.
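To make the difference between the two combining operations concrete, here is a small sketch with hypothetical `sales` and `marketing` tables represented as lists of dictionaries. The helpers `inner_join` and `union` are illustrative names, not part of any library. In practice a database or a data library would do this work.

```python
sales = [
    {"email": "ada@example.com", "order_total": 120.0},
    {"email": "alan@example.com", "order_total": 80.0},
]
marketing = [
    {"email": "ada@example.com", "campaign": "spring"},
    {"email": "grace@example.com", "campaign": "summer"},
]

def inner_join(left, right, key):
    """Join: combine columns from rows that share the same key value."""
    index = {}
    for right_row in right:
        index.setdefault(right_row[key], []).append(right_row)
    return [
        {**left_row, **right_row}
        for left_row in left
        for right_row in index.get(left_row[key], [])
    ]

def union(*tables):
    """Merge/append/union: stack records from several tables into one list."""
    return [row for table in tables for row in table]

# Joining adds columns: each sale is matched with its marketing campaign.
funnel = inner_join(sales, marketing, "email")

# Union adds rows: two tables with the same columns are stacked.
more_sales = [{"email": "grace@example.com", "order_total": 45.0}]
all_sales = union(sales, more_sales)
```

A join widens the result with columns from both tables, while a union lengthens it with rows from tables that share the same columns.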

Data transformations may be divided into two categories: batch and interactive.

Batch transformation is the traditional approach. Using a data integration tool, you run code and apply transformation rules to your data. The term "micro batch" describes transforming and delivering data in small batches with low latency, which takes little time and few resources. Businesses have used traditional batch transformation for decades, but its effectiveness is limited by drawbacks such as the need for human involvement, high cost, and slow processing.
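One way to picture micro-batching is a loop that transforms a stream a few records at a time instead of waiting for the whole dataset. The sketch below is only an illustration: `micro_batches` and `transform` are hypothetical helpers, and the uppercase rule stands in for whatever transformation rules apply.

```python
from itertools import islice

def micro_batches(records, size):
    """Yield successive lists of at most `size` records from any iterable."""
    iterator = iter(records)
    while batch := list(islice(iterator, size)):
        yield batch

def transform(batch):
    # Placeholder transformation rule: uppercase every value.
    return [value.upper() for value in batch]

incoming = ["a", "b", "c", "d", "e"]  # hypothetical stream of records
results = [transform(batch) for batch in micro_batches(incoming, size=2)]
```

Each small batch can be delivered as soon as it is transformed, which is where the low latency comes from.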

Interactive transformation gives businesses a visual interface for analyzing data and for correcting or updating it with clicks. No specialized technical knowledge or experience is needed to perform each stage of an interactive data transformation.

It surfaces patterns and outliers in the dataset so that inaccuracies can be removed. Because it does not require a developer's help, interactive data transformation reduces the time spent on data preparation and transformation, and it gives business analysts full control over the data they work with.

To get the full benefit of the data they collect, businesses need to transform it.

Data transformation is an important step in the data analysis process that involves changing and modifying data from one form to another so that it may be analyzed. Filtering, combining, cleaning, and formatting data, as well as computing new variables or aggregates, may be included.

The goal of data transformation is to make sure the data is in a usable format, which helps reveal patterns, relationships, and insights that were not visible in the original dataset. The process can also help identify and correct data errors, which is crucial for reliable analysis.

In conclusion, data transformation is an important step in the data analysis process that modifies data to make it suitable for analysis.
