
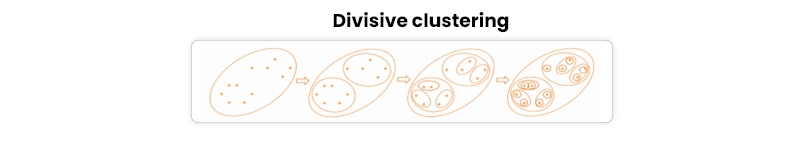

Clustering is a popular technique in data science for grouping similar objects based on their characteristics. It helps identify patterns and relationships within the data, which is useful for applications such as customer segmentation, image recognition, and anomaly detection. Divisive clustering is one of the two main approaches to hierarchical clustering. In this post, we will discuss divisive hierarchical clustering: its definition, advantages, disadvantages, and how to implement it using Python. Before diving into divisive clustering and its importance in data science and data mining, you may want to check out the data science tutorial guide to understand the basic concepts.

Divisive hierarchical clustering is a popular technique in various fields, such as biology, computer science, and data mining. This method is advantageous when dealing with large datasets where it is not feasible to group objects manually based on their similarities or differences. One of the primary advantages of divisive hierarchical clustering is that it provides a complete hierarchy of clusters, allowing for a more detailed analysis of the relationships between different objects. Additionally, this method can handle different data types, including numeric values and categorical variables, provided a suitable distance or dissimilarity measure is chosen.

The algorithm for divisive hierarchical clustering involves several steps.

Step 1: Treat all objects as part of one big cluster.

Step 2: Split the big cluster into smaller clusters using any flat clustering method, e.g. k-means.

Step 3: Select the cluster to split next, based on some distance metric such as Euclidean distance or a correlation-based measure, and split it into two smaller sub-clusters.

Step 4: Repeat the process recursively until each object forms its own cluster.
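The steps above can be sketched in plain Python. This is only a minimal illustration under some assumptions that are not part of the original description: the flat method is a basic 2-means, the cluster chosen for the next split is the one with the largest sum of squared Euclidean distances to its mean, and the function names (`two_means`, `sse`, `divisive_clustering`) are hypothetical helpers.

```python
import math


def two_means(points, iters=20):
    """Split `points` into two groups with a basic k-means (k=2)."""
    # Deterministic start: pick two points that are far apart.
    a = max(points, key=lambda p: math.dist(p, points[0]))
    b = max(points, key=lambda p: math.dist(p, a))
    centers = [a, b]
    for _ in range(iters):
        groups = ([], [])
        for p in points:
            # Assign each point to its nearest center (Euclidean distance).
            nearest = 0 if math.dist(p, centers[0]) <= math.dist(p, centers[1]) else 1
            groups[nearest].append(p)
        if not groups[0] or not groups[1]:
            # Degenerate case (e.g. identical points): fall back to an even cut.
            half = len(points) // 2
            return points[:half], points[half:]
        # Recompute each center as the mean of its group.
        new_centers = [tuple(sum(c) / len(g) for c in zip(*g)) for g in groups]
        if new_centers == centers:
            break
        centers = new_centers
    return groups[0], groups[1]


def sse(cluster):
    """Sum of squared distances from each point to the cluster mean."""
    center = tuple(sum(c) / len(cluster) for c in zip(*cluster))
    return sum(math.dist(p, center) ** 2 for p in cluster)


def divisive_clustering(points, n_clusters):
    """Top-down clustering: start with one cluster, split until n_clusters."""
    clusters = [list(points)]  # Step 1: everything in one big cluster
    while len(clusters) < n_clusters:
        # Step 3: choose the cluster to split next (assumed rule: largest spread).
        splittable = [c for c in clusters if len(c) > 1]
        if not splittable:
            break  # Step 4 end state: every object is its own cluster
        target = max(splittable, key=sse)
        clusters.remove(target)
        # Step 2: split the chosen cluster with a flat method (2-means here).
        left, right = two_means(target)
        clusters.extend([left, right])
    return clusters


points = [(1.0, 1.0), (1.5, 2.0), (1.0, 1.5),
          (8.0, 8.0), (8.5, 9.0), (9.0, 8.0),
          (1.0, 9.0), (1.5, 8.5)]
clusters = divisive_clustering(points, 3)
for c in clusters:
    print(sorted(c))
```

Passing `n_clusters=len(points)` runs the recursion all the way down to single-object clusters, as in Step 4; stopping earlier gives a coarser level of the same hierarchy.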

For example, suppose we have a dataset of customer information such as age, income level, and purchase history. Using divisive hierarchical clustering, we could group customers based on their similarities in these attributes to identify potential target markets for marketing campaigns or product development efforts. If you are interested in a career path in data science, we have a complete guide to help you with your new career opportunities and growth.

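To implement hierarchical clustering using Python, we can use the SciPy library, which provides a function called "linkage" that performs hierarchical clustering. Note that "linkage" builds the hierarchy bottom-up (agglomerative clustering); SciPy does not provide a dedicated divisive routine, so a top-down split has to be composed from other pieces, for example by repeatedly bisecting clusters with a flat method such as k-means.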
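As a small illustration of the SciPy `linkage` function mentioned above, the sketch below builds a hierarchy and cuts it into two flat clusters. Keep in mind that `linkage` is agglomerative (bottom-up), so this shows the SciPy hierarchical clustering API rather than a divisive algorithm. The toy data, the choice of Ward linkage, and the cut into two clusters are assumptions made for the example.

```python
import numpy as np
from scipy.cluster.hierarchy import linkage, fcluster

# Toy 2-D data: two visually separated groups of three points each.
X = np.array([[1.0, 1.0], [1.5, 2.0], [1.0, 1.5],
              [8.0, 8.0], [8.5, 9.0], [9.0, 8.0]])

# Build the hierarchy bottom-up with Ward linkage on Euclidean distances.
# Z has one row per merge: (cluster i, cluster j, distance, new cluster size).
Z = linkage(X, method="ward")

# Cut the tree so that at most two flat clusters remain.
labels = fcluster(Z, t=2, criterion="maxclust")
print(labels)
```

The linkage matrix `Z` can also be passed to `scipy.cluster.hierarchy.dendrogram` to draw the full hierarchy.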

Divisive hierarchical clustering is a clustering algorithm that divides a dataset into smaller subgroups or clusters based on certain criteria. There are numerous advantages to using this technique in hierarchical clustering.

Divisive clustering is an effective clustering technique in data science, but it comes with its own limitations.


Divisive clustering is a powerful technique for grouping similar objects together based on their characteristics. It provides a clear hierarchy of clusters at different levels of granularity. However, it can be computationally expensive when dealing with large datasets and requires a careful selection of distance metrics to achieve good results. By implementing hierarchical clustering in Python, for example with the SciPy library, we can cluster our data and gain valuable insights into its structure and relationships. If you're looking to improve your skill set or begin your career in the world of data, you may enroll in one of the top data science certification courses.
