
The history of deep learning traces its roots back to the 1940s, when Warren McCulloch and Walter Pitts proposed an artificial neuron model that laid the groundwork for neural network research. Alan Turing discussed the idea of machines that learn in 1950. Still, it wasn't until the 1960s that Soviet mathematicians, notably Alexey Ivakhnenko and Valentin Lapa, put these ideas into practice with some of the first working multilayer networks, starting the deep learning timeline traced below.

The history of neural networks, a cornerstone of deep learning, begins with early theoretical models of the brain's neural structure. In 1943, Warren McCulloch and Walter Pitts introduced the first concept of an artificial neuron, proposing a simplified model of human brain cells that could perform basic logical functions.
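To make the idea of a neuron performing logical functions concrete, here is a minimal sketch of a threshold unit in the spirit of the McCulloch-Pitts model. The function names, weights, and thresholds are illustrative assumptions, and the negative weight used for NOT is a common simplification rather than the exact inhibition rule of the original 1943 formulation.

```python
def mp_neuron(inputs, weights, threshold):
    """Threshold unit: output 1 when the weighted input sum reaches the threshold."""
    total = sum(w * x for w, x in zip(weights, inputs))
    return 1 if total >= threshold else 0


def logic_and(a, b):
    # Both inputs must be active to reach a threshold of 2.
    return mp_neuron([a, b], [1, 1], threshold=2)


def logic_or(a, b):
    # A single active input is enough to reach a threshold of 1.
    return mp_neuron([a, b], [1, 1], threshold=1)


def logic_not(a):
    # An inhibitory (negative) weight with threshold 0 inverts the input.
    return mp_neuron([a], [-1], threshold=0)


if __name__ == "__main__":
    for a in (0, 1):
        for b in (0, 1):
            print(a, b, "AND:", logic_and(a, b), "OR:", logic_or(a, b))
    print("NOT 0:", logic_not(0), "NOT 1:", logic_not(1))
```

Note that nothing here is learned: the weights and thresholds are fixed by hand, which is exactly the gap the Perceptron later addressed.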

In the late 1950s, Frank Rosenblatt developed the Perceptron, a significant advancement in neural network research. The Perceptron was an early neural network capable of simple pattern recognition tasks, and one of the first algorithms to adjust its own weights by learning from data.

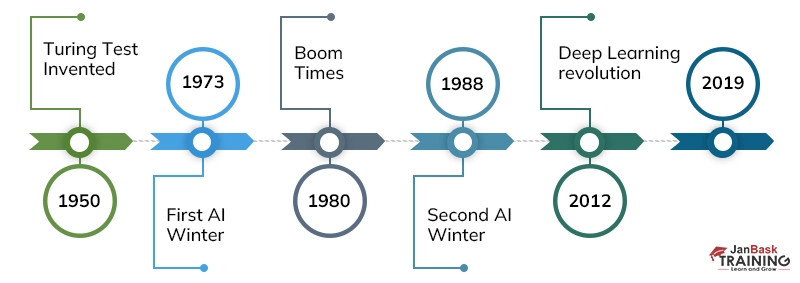

Despite these early successes, neural network research faced challenges. In 1969, Marvin Minsky and Seymour Papert highlighted limitations of the single-layer Perceptron in their book Perceptrons, particularly its inability to solve problems that are not linearly separable, such as the XOR function. This critique led to reduced funding and interest in neural network research, a period often referred to as the first AI winter.
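The linear-separability limitation can be seen in a few lines of code. The sketch below uses the classic perceptron learning rule on two tiny datasets; the function names, learning rate, and epoch count are assumptions chosen for illustration, not Rosenblatt's original setup. The rule finds a separating line for AND, but no choice of weights can classify all four XOR points correctly.

```python
def predict(w, b, x1, x2):
    """Single-layer perceptron: step function over a weighted sum."""
    return 1 if w[0] * x1 + w[1] * x2 + b > 0 else 0


def train_perceptron(samples, epochs=50, lr=1.0):
    """Perceptron learning rule: nudge the weights toward each misclassified sample."""
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        errors = 0
        for (x1, x2), target in samples:
            delta = target - predict(w, b, x1, x2)
            if delta != 0:
                w[0] += lr * delta * x1
                w[1] += lr * delta * x2
                b += lr * delta
                errors += 1
        if errors == 0:  # every sample classified correctly, so stop early
            break
    return w, b


def accuracy(w, b, samples):
    hits = sum(predict(w, b, x1, x2) == t for (x1, x2), t in samples)
    return hits / len(samples)


AND_DATA = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR_DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

if __name__ == "__main__":
    for name, data in (("AND", AND_DATA), ("XOR", XOR_DATA)):
        w, b = train_perceptron(data)
        print(name, "accuracy:", accuracy(w, b, data))
```

Adding a hidden layer removes this limitation, which is why later multilayer networks trained with backpropagation mattered so much.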

Since the 1990s, the field has seen rapid advancements, including the development of neural network architectures such as Convolutional Neural Networks (CNNs), pioneered by Yann LeCun, and Recurrent Neural Networks (RNNs). The introduction of Long Short-Term Memory (LSTM) networks in 1997 by Sepp Hochreiter and Juergen Schmidhuber further revolutionized the field, particularly for tasks involving sequential data like speech and text.

Today, neural networks form the backbone of many deep learning applications, from image and speech recognition to natural language processing. Ongoing research focuses on making these networks more efficient, more interpretable, and capable of learning with less data, paving the way for more innovative and effective solutions in various domains.

The 1970s marked a pivotal era with the development of backpropagation, which is crucial for training deep learning models. This technique, however, was not widely applied to neural networks until the mid-1980s, when Rumelhart, Hinton, and Williams demonstrated its effectiveness in work published in 1986. In 1989, Yann LeCun provided the first practical demonstration of backpropagation at Bell Labs, using it to read handwritten digits.

During the 1990s, significant advancements were made despite the second AI winter. In 1995, Corinna Cortes and Vladimir Vapnik published the support vector machine in its modern soft-margin form. Long Short-Term Memory (LSTM) for recurrent neural networks was introduced by Sepp Hochreiter and Juergen Schmidhuber in 1997.

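Around the turn of the millennium, the vanishing gradient problem became widely recognized as a core obstacle to training deep networks, although Sepp Hochreiter had already analyzed it in 1991. In deep networks trained with saturating activations such as the sigmoid, the error signal shrinks as it is propagated back through the layers, so the earliest layers learn very slowly. This issue was later mitigated largely by advancements such as ReLU activation functions. The launch of ImageNet by Fei-Fei Li in 2009 provided a substantial database for neural network training.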
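A rough numerical illustration shows why activation choice matters for the vanishing gradient problem. The sketch below is a deliberately simplified toy: it multiplies only the activation derivatives along a chain of layers and ignores the weight matrices, and it assumes every layer sees the same pre-activation value. The function names are hypothetical. The sigmoid derivative never exceeds 0.25, so the product collapses with depth, while the ReLU derivative is exactly 1 for positive inputs.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def sigmoid_grad(z):
    # Derivative of the sigmoid; its maximum value is 0.25, reached at z = 0.
    s = sigmoid(z)
    return s * (1.0 - s)


def relu_grad(z):
    # Derivative of ReLU: 1 for positive inputs, 0 otherwise.
    return 1.0 if z > 0 else 0.0


def chained_gradient(grad_fn, pre_activation, depth):
    """Product of `depth` identical local derivatives (weights are ignored)."""
    g = 1.0
    for _ in range(depth):
        g *= grad_fn(pre_activation)
    return g


if __name__ == "__main__":
    for depth in (1, 5, 10, 20):
        print(
            depth,
            "sigmoid:", chained_gradient(sigmoid_grad, 0.0, depth),
            "relu:", chained_gradient(relu_grad, 1.0, depth),
        )
```

In a real network the weights also scale the gradient and ReLU units with negative inputs pass no gradient at all, so this is only a sketch of the trend, not a full account.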

By 2011, the rapid advancement of GPU technology made it feasible to train convolutional neural networks more efficiently. Google Brain's Cat Experiment in 2012 demonstrated the potential of unsupervised learning. The subsequent years have seen continual growth and innovation in deep learning, driven by increasing computational power and data availability.

Deep learning experienced a boom in the early 2010s. The pivotal moment came with AlexNet's victory in the 2012 ImageNet competition. This GPU-implemented CNN, designed by Alex Krizhevsky together with Ilya Sutskever and Geoffrey Hinton, showcased deep learning's potential in image classification with a top-5 accuracy of about 84% (a top-5 error rate of 15.3%), a substantial leap over previous models. This victory sparked a global deep learning boom.

In 2014, Ian Goodfellow and his colleagues introduced Generative Adversarial Networks (GANs). GANs represented a novel approach to deep learning: a generator network and a discriminator network are trained against each other until the generator can synthesize realistic data. Their applications extended across various domains, from fashion to art and science, opening new doors for the utility of deep learning.

Deep learning also significantly impacted the field of reinforcement learning. Foundational work by researchers like Richard Sutton contributed to the success of game-playing systems developed by DeepMind, such as AlphaGo. These systems demonstrated AI's ability to learn and adapt in complex, dynamic environments.

Stanford University's One Hundred Year Study on Artificial Intelligence, founded by Eric Horvitz, is another testament to the growing significance of deep learning. It builds on longstanding AI research and provides a structured overview of AI's progression and future trajectory.

Deep learning continues to evolve rapidly. One of the current focuses is on unsupervised and semi-supervised learning methods, addressing the challenges of data labeling and efficiency. There's also a significant emphasis on making deep learning models more interpretable and transparent, an essential factor for their adoption in critical applications like healthcare and autonomous driving.

Another area of interest is integrating deep learning with other AI techniques, such as symbolic AI, to create systems that are both strong at pattern recognition and capable of reasoning and understanding.

The evolution of deep learning has profoundly influenced education and industry. Online deep learning courses now include comprehensive modules on the latest advancements, such as GANs, reinforcement learning, and optimization techniques. Deep learning with Python has become a popular route for those looking to implement these advanced algorithms in practice.

The history of deep learning is a story of transformation and continual advancement. From its early days as a theoretical concept to its current status as a critical technology driving innovation across industries, deep learning's journey is marked by groundbreaking discoveries and persistent challenges. The field promises to unveil even more sophisticated and impactful applications as we move forward, fundamentally altering our interaction with technology and data.

